They tell you that injustice is part of some grand plan. And that’s what keeps you from rising against it.

—Shehan Karunatilaka, The Seven Moons of Maali Almeida

Patient R was in a hurry. I signed into my computer, or tried to. Recently, IT had us update to a new 14-digit password. Once in, I signed (different password) into the electronic medical record. I had already ordered routine lab tests, but R had new info. I pulled up a menu to add an HIV viral load to capture early infection, which the standard antibody test might miss. R went to the lab to get his blood drawn.

My last order did not print to the onsite laboratory. An observant nurse had seen the order and no tube. The patient had left without the viral load being drawn. I called the patient: could he come back?

Healthcare workers do not like the electronic health record (EHR), where they spend more time than with patients. Doctors hate it, as do nurse practitioners, nurses, pharmacists, and physical therapists. The National Academies of Sciences, Engineering, and Medicine reports that the EHR is a major contributor to clinician burnout. Patient experience is mixed, though the public is still concerned about privacy, errors, interoperability and access to their own records.

The EHR promised a lot: better accuracy, streamlined care, and patient-accessible records. In February 2009, President Obama signed the HITECH Act on this promise, investing $36 billion to scale up health information technology. No more deciphering bad handwriting for critical info. Efficiency and cost-savings could get more people into care. We imagined cancer and rare disease registries to research treatments. We wanted portable records accessible in an emergency. We wanted to rapidly identify the spread of highly contagious respiratory illnesses and other public health crises.

Why had the lofty ambition of health information, backed by enormous resources, failed so spectacularly?

A history

Medicine narrates the beginning of medical records with the 2,500-year-old Hippocratic corpus. These books included individual patients’ courses, bearing witness, observing patterns and preserving a teaching library for future generations. This fused with a pedagogical approach that continued with the Romans, evolving into the medical advances of the Islamic Golden Age. Physician and philosopher Ibn Sina left hundreds of medical texts, as did Maimonides, the rabbi and physician in North Africa; their work shaped medicine for centuries. Each of these collections had a version of: “Treat the patient, not the disease.”

While medieval Islamic hospitals operated as hospitals in the contemporary sense, European hospitals were “God’s hotels,” almshouses run by nuns for the ill and poor. The Church excluded women, who were usually the keepers of those healing traditions, absorbing their knowledge while burning its practitioners. This effectively consolidated the Church’s authority. The same strategy was deployed in the Church’s colonial reach, extracting knowledge from other civilizations while subjugating and erasing people. Colonization and military campaigns through the 18th and 19th centuries also brought insight from battlefield surgery back to the growing cities of Paris, Vienna and Berlin. The almshouses became academic medical centers. The records still held knowledge and teaching: junior surgeons kept detailed notes on patients, passing these notes to the next generation.

The rapid industrialization, urbanization, militarization and colonization of the 19th and 20th centuries, with their related bureaucratic expansion, are, well, beyond this brief history. Let’s say it takes a lot of paperwork to run empire and industry. A class of administrators grew to meet this need. State regulations also increased, requiring annual reports of hospital admissions, outcomes and expenditures, which meant increased standardization.

Dawn of the Flexner Report

While medical administration proliferated, so did instruments (stethoscopes, ophthalmoscopes, thermometers) that could “perceive more deeply.” This changed what constituted medical information, shifting focus from interview (emphasis on patient’s testimony) to physical exam (emphasis on the clinician’s observations). The charge was led by the “French School,” especially Jean-Nicolas Corvisart, Napoleon’s personal physician. Later, German physicians argued that we ought to center lab technology, further removing the patient’s authority. Lab findings were more “pure”: a truthier truth.

These trends reached apotheosis in the 1910 Flexner Report, which shapes US medical education to this day. Abraham Flexner was neither a scientist nor a physician. He was an educator, whose early work criticized rigid curricula and over-emphasis on research. This attracted notice from the Hopkins Circle (described by a Yale medical professor as a “particularly American…aristocracy of excellence…not defined by one’s origins or wealth, although wealth permitted the group’s recommendations to be successful.”) The group had just recruited William Osler, the Canadian physician fond of pithy aphorisms like “varicose veins are the result of improper selection of grandparents.” Osler continued an ancient framework: “The good physician treats the disease; the great physician treats the patient who has the disease.”

The Flexner Report diverged from Flexner’s earlier work, calling for more standardization and scientific research in medical education. If Osler carried the tradition of centering the patient, this report was the German academic clinic, where the patient was second to the professor. Funding from the Carnegie and Rockefeller foundations enabled rapid implementation of this “lab-centered” model. Of 160 MD-conferring institutions in 1904, only 66 stood by 1935. Only two of five Black medical schools remained. The report advised continuing segregation (with ongoing ramifications) and limiting physician numbers (scarcity was good business). It also reversed earlier gains in admitting women. Meanwhile Henry S. Plummer (a Mayo Clinic founder) urged that medical records mimic business and economic reports, with more graphs, diagrams and lab data.

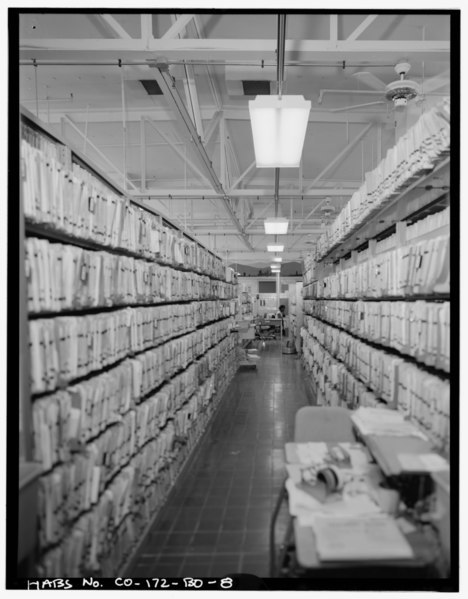

Fusing scientific methods with clinical care (and funding) did yield powerful 20th-century medical “blockbusters”: insulin, surgical techniques, antibiotics, chemotherapy. More data also necessitated more data management, requiring more labor. The electronic record emerged in the 1960s. It required tediously converting paper data into punch cards, but enabled large-scale medical research, economic analysis and administration.

Patient R could not return. Despite worrying symptoms and possible exposure, he was not focused on HIV testing. In the four months since moving here, he had not been able to get a psychiatry appointment, and he needed meds. The team conferred: we could run the viral test if I canceled another, less critical test. Each order I canceled, and each order I entered, printed multiple pages to the lab. The tech gathered, sorted and manually entered revisions into the lab company’s separate computer system. What years ago would have been a brief handwritten list carried to the lab had become a more baffling and arduous procedure.

Hope for Change: Scaling the EHR

In 2008, a young president promising hope steps into a global financial meltdown. Barack Obama was a former law professor at the University of Chicago, where he met the behavioral economist Cass Sunstein. That year, Sunstein and Richard Thaler (a future Nobel laureate) published the book Nudge, arguing for a policy framework based on behavioral economics. Instead of heavy-handed regulations, governments should shape the “choice architecture” that individuals face, to favor more rational choices (as defined by the economists). Examples: changing an “opt out” choice into “opt in” (dispensing plastic utensils with takeout only if asked), or rearranging visual layouts (displaying water more prominently than soda). Sunstein called this “libertarian paternalism.” Instead of resource-intensive interventions risking fraught political negotiations, nudging offered politically neutral, no-cost, minimalist interventions, based on science.

This is a very appealing promise! Obama embraced a lineage of liberal technocrats, confident of a “third way” to preserve free markets and address social needs. Obama brought on Sunstein and Thaler, eventually opening a “Nudge Unit,” helmed by a neuroscientist. Policy would now be informed by psychology, behavioral economics and other “decision sciences.”

Meanwhile, Obama wanted a massive government stimulus to stabilize the economy. This stimulus needed targets. He proposed one such target: “every doctor’s office and hospital in this country is using cutting-edge technology and electronic medical records so that we can cut red tape, prevent medical mistakes and help save billions of dollars each year.” This was a ready-to-go (if underfunded) Bush project. In 2009, the HITECH Act injected vast amounts of money into scaling health information technology.

And they kind of did it! In 2008, only 9% of hospitals used an EHR. By 2011, 50% had implemented an EHR. Now 96% of hospitals use a federally certified EHR system. Obama’s vision had accelerated a new era.

So why was everyone complaining? In 2018, Atul Gawande published a piece in The New Yorker called “Why Doctors Hate Their Computers.” He described what physicians experienced daily with the newly installed EHR at Harvard’s hospitals: redundancy, complexity, wasted time. A hospital administrator responded that doctors’ dissatisfaction was irrelevant: the EHR was for patients.

The patients were also experiencing trouble, not least of which was that their clinicians spent visits squinting at screens. KFF Health News reported (a decade and $36 billion after the HITECH Act) a long trail of EHR-related errors resulting in death and serious injury. Medication lists did not update with changed prescriptions. Glitches linked one patient’s medical note to another’s file. Lawsuits were filed for failure to flag critical test results to medical teams, delaying time-sensitive treatment. A central premise of HITECH had been accessible records for patients and families. The administration’s own vice president, Joe Biden, could not transfer his son’s cancer treatment records from one hospital to another.

The rushed implementation

The goal of a stimulus is to push cash into the economy as quickly as possible. This is not the same goal as building a sensible and sustainable digital infrastructure. With threats and subsidies, the federal government pressured EHR vendors to rapidly meet government certification, then pressured clinics and hospitals to purchase and implement the product. Rusty Frantz, the CEO of NextGen, confessed to KFF that “the software wasn’t implemented in a way that supported care, it was installed in a way that supported stimulus.”

The push for speed is perhaps why, among other shortcuts, software made for billing was commandeered for the more complex EHR functions. In contrast, the Veterans Administration’s (VA) EHR, VistA, created in 1983, had developers work directly with clinical staff to prioritize intuitive workflow; it has consistently outranked all other EHRs in user satisfaction. Speed is also why interoperability was not prioritized. Consider as a counterexample the global system of ATMs, developed in the 1980s. Achieving interoperability required standardized network elements. This in turn required international political negotiations to agree on shared technical specifications. The American EHR is instead a patchwork of disconnected proprietary systems created by over 700 vendors, what the KFF report called “an electronic bridge to nowhere.” Medical staff, patients and families fall back on older technology (CD-ROMs, fax, notebooks) to move critical information.

David Blumenthal, a HITECH architect, acknowledged that, in retrospect, it may have been more effective to build interoperability at the start. Then-chief technology officer Aneesh Chopra argued that rushing was necessary, a move-fast-break-things situation to be refined later. So now 96% of the nation’s hospitals are locked into a disjointed, shoddy infrastructure.

Perverse business incentives

Healthcare isn’t structured around patient needs; it is a business that prioritizes profit. EHR vendors rushed to claim stimulus money and market share. Economists call this rational, but also perverse. It has paid off: despite lawsuits, fraud accusations and prosecutions for rushed, error-prone products, EHR is now a $28 billion industry and growing. Financial incentives also shape hospitals and private practices, which lose revenue when patients go to other institutions; there is no financial reward for sharing medical charts, nor the proprietary data entangled in them. Then there is liability. Thousands of patient deaths, injuries and near misses are attributed to EHR errors, but this is only what is known. EHR vendors imposed “gag clauses” on buyers, preventing disclosure of safety issues. Medical institutions allegedly withheld records to cover mistakes as well.

Surely this is fixable? Gawande listed experiments his hospital tried to improve the EHR: time-intensive tailoring of the interface and developing an ecosystem of apps (the hospital and EHR vendor resisted both). The hospital also hired scribes to free up doctors’ time. The American Medical Association has a toolkit of ideas like delegating inbox duties and “getting rid of stupid stuff” (GROSS). The most recent solution for interoperability has been the cloud. This replaces the high cost of in-house operations with a subscription. Large hospital systems have begun migrating records into Microsoft and Google subscriptions. These are the companies with the scale, experience, security and analytic capacity to support the massive edifice of medical records. This degree of consolidation raises another set of concerns about privacy, security and data ownership within an industry already fighting federal patient protections, with a spotty human rights record.

The Technocratic Veneer on Institutional Problems

Technology does not bypass labor, it re-organizes it

By 2017, Obama despaired, “there are still just mountains of paperwork…and the doctors still have to input stuff, and the nurses are spending all their time on all this administrative work...”

There is an illusion that technology automates work—instead it only changes it. Someone must still tediously punch the cards and maintain them. The hype around novel technology inflates its capacity, obscuring how it requires an old capitalist foundation: cheap labor. Automation is often used as a threat to drive down wages rather than improve conditions. Digitizing healthcare can create well-paid programmers and administrators. It also relies on outsourcing cheaper, invisible, less regulated labor: mining raw materials, manufacturing, shipping, precarious contractors, and vast armies of human intelligence to train artificial intelligence. The maps of those labor markets often overlap significantly with the map of former colonies.

Technology is frequently touted as a method for eliminating bureaucracy, yet it just as easily enables its expansion. Between 1975 and 2010, the number of physicians grew 150%, same as population; healthcare administrators grew 3200%. Despite that expansion, the EHR still re-routes hidden administrative work back to direct-service labor like physicians, nurses, and pharmacists. Hours once spent with patients are now spent on clerical labor. One physician told KFF, “I have yet to see the CEO who, while running a board meeting, takes minutes, and certainly I’ve never heard of a judge who, during the trial, would also be the court stenographer. But in medicine … we’ve asked the physician to move from writing in pen to [entering a computer] record, and it’s a pretty complicated interface.”

This is not mere data entry. Digitizing health records demands significant cognitive labor to translate clinical information. A hospital’s daily 137 terabytes of medical information (messy, unstructured narratives and images, in fluid categories evolved over millennia) must be converted into highly structured data legible to the EHR. The promise of machine learning and AI is premised on this extra labor, taken from patient care and workers’ personal time (physicians take home an average of 1 to 2 hours of uncompensated charting). “It’s no secret that healthcare is a data-driven business,” notes a health IT trade magazine. Providers interpret clinical realities into forms that can train “data-hungry” proprietary algorithms. Rather than decreasing clinician workload, the EHR extracts it.

I go back to my computer to examine why my test order did not go through. I type “HIV” into an order box, which pulls up dozens of options. Some are distinct tests (viral load, antibody, genotype) requiring knowledge to choose appropriately. Others are the same orders but for other clinics sharing the EHR: labs for a Texas site pull up for a patient in St. Louis. I skip the search and navigate to a menu of tests exclusive to our site. This brings up two identically-named lab menus in the same column. Expanding one menu sends the lab order to “external interface.” There is a second button: “outside collection.” But to properly order the test, I needed to have clicked the third button, “external interface-outside collection.”

I have worked in many settings. My clinic’s EHR non-sequiturs are not unique. Most institutions are eager to respond to feedback. The order menus now default to the correct button. To improve the EHR I am usually advised to personalize a menu toolbar, generate templates (“dot phrases”) and streamline the interface. If ambitious, I could join a committee to, over weeks, remove duplicate menus. Or, if I want to make it home, I can jerry-rig yet another work-around for the hundreds of inevitable idiosyncrasies.

A front-end investment of time promises eventual efficiency. Seems reasonable! Each EHR, however, is specific to each site, even with the same software. Providers commonly round at multiple hospitals, clinics, nursing facilities, even under one employer, sometimes in the same building. Each facility’s Epic (the dominant EHR) has a distinct interface. Painstakingly built menus, phrases and unique shortcuts developed in one context cannot transfer to another. Orders have different names and codes. Muscle memory is useless. (In contrast, the VA’s 1983 VistA designers, knowing clinicians rotate through services, focused on only two goals: patient care and rapid adaptability.) It is time-consuming, and none of this is actual patient care.

Adapting the EHR to be more useful is Sisyphean. So is navigating the sheer volume of signal it produces. An ICU clinician receives 7000 passive alerts a day, making it difficult to discern critical signals from noise. Swollen by repetitive cut-and-pastes, American medical notes are twice as long as they were a decade ago and many times longer than notes elsewhere in the world. The next person must sort through all this to find what’s relevant.

This is no Hippocratic corpus. We have traveled far from the attentive observations meant to bear witness and teach future healers. For most of our workday, we treat neither patient nor disease.

If we resist or slip, the EHR is ready-made surveillance, with swift consequences. In December 2020, deep in a COVID-19 winter surge, I covered my colleague’s patients on a weekend. I ended the 15-hour day by charting, billing and tying loose ends. The next week I received an email, threatening to suspend my privileges. The message opened sternly: “Timely and accurate completion of medical records is essential for quality of care and an important step for patient safety.”

Reader, of course I panicked. Physician-writer Emily Silverman wrote that, sure, healthcare workers are “motivated by compassion and a love of medical science, but also a desire for external validation.” Like most people, we do not like to be monitored and berated. Eventually, I found that the system had auto-flagged a pended note draft for a patient I never saw. I create such drafts to abstract info from each chart before rounding. The patient did well and went home before I saw them. There was no patient safety at stake. The EHR had sent a polite reminder (among dozens) to my inbox, which I missed. Why would I sign into that hospital’s Epic, when I was now overwhelmed with work in another hospital’s Epic? Amid a global health emergency requiring all hands on deck, in a hospital that had just run out of ventilators, decked in posters saluting its heroes, I stopped everything to clear my inbox.

It is not only the technology increasing labor

The EHR and its administrative burden contribute to burnout. There is also more clinical work. Increasing corporatization of medicine and staff shortages have increased the volume of patient care for each healthcare worker, a trend that has accelerated since the pandemic. In Gawande’s report, the hospital hired human scribes to document, freeing the doctors’ time to focus on patients. The doctors remained burnt out because the hospital then gave them even more patients. Even where unions are strong, such as among California nurses, hospitals cut non-unionized ancillary staff, effectively increasing the work.

This trend is accelerated by the incursion of private equity, which has rapidly expanded in healthcare. Private equity now has large market shares in anesthesiology, obstetrics, dentistry, radiology, nursing homes, and even lucrative end-of-life care. Private equity’s central goal is short-term profit, not healthcare. This is achieved through aggressive “efficiencies”: loopholes to increase billing, cutting services, increasing invasive procedures, cutting staff, and employing staff with less training than previously required. This model has generated large profits, while the “perverse incentives” create worse care, higher cost, and detriment to workforce infrastructure.

The Obama era’s faith in technological overhaul did not fix a fractured healthcare system that, like all profit-driven enterprises, squeezes labor before margins.

Technology matters less than the social determinants of health

In Akshay Pendyal’s excellent essay about the insidious role of Nudge theory in medicine, he identifies the specific outrageousness of transmuting social failures into individual responsibilities. Rather than address the material insecurity of a patient with congestive heart failure (compelling her to do grueling work in an Amazon warehouse), nudge theory offers “one-weird-trick” suggestions like pill boxes that buzz medication reminders. “Such efforts,” Pendyal remarks, “seem futile at best—and at worst, bordering on cruel.” Nudging misdirects attention from institutions, social structures, and resource distribution, towards correcting individual behavior. We nudge the patient’s obesity, addictions, and noncompliance with doctor’s orders. This focus contradicts a century of medicine’s own rigorous research showing that medical “blockbuster” treatments matter, but not as much as the systemic determinants of health: housing, material security, safety from violence, community support, public infrastructure and environmental stewardship, which shape things like access to clean water.

Digitizing medical information is useful, but it does not address problems that require redistributing resources, nor does it bring the people most impacted by policy to the table. That change requires politics. If it’s “perverse incentives” all around, perhaps, as economist Kenneth Arrow argued in 1963, markets don’t work in this setting.

Effective politically neutral, no-cost, minimalist interventions are rare. As for “based on science”: nudging and behavioral economics repeatedly invoke science to claim they are beyond politics. Sunstein himself, when running the Office of Information and Regulatory Affairs (where he bragged that he had issued fewer regulations than the Reagan, Bush, Clinton, and W. Bush administrations), insisted on the office’s apolitical stance because it was scientific, even as it regularly met with lobbyists.

At a Nudge in Healthcare symposium, Pendyal observed repeated attempts to ground behavioral economics, however tenuously, in neurobiology. Like the clinician’s gaze and lab data usurping the patient’s testimony, behavioral economics claims the “truthier truth.” Perhaps this insistence is necessary because the science in question is not good. Behavioral economics and its adjacent fields are undergoing a replication crisis, in which some of the field’s splashiest figures are accused of, at best, “p-hacking” (massaging the data until something pops out) and, at worst, blatant fabrication. The ease with which bad science dominated policy may just be the most recent iteration of Max Weber’s observations: these frameworks are less natural science and more rationalizations of the ruling class’s methods of disciplining the laboring class.

In Sunstein’s Nudge, you’re stalled by misleading logic before getting to scientific methodology. Sunstein cites highway signs reminding drivers to “click it or ticket!” as a nudge example. However, that “nudge” works because the government deployed command-and-control regulations: requiring auto-manufacturers to include seat belts and people to use them, under threat of fine. Robert Kuttner wryly noted that behavioral economics was initially a critique of neoclassical economics’ “rational” agent. The early rigorous experiments of Kahneman, Thaler and others demonstrated that human beings deviate from this model of “rationality”. In Sunstein’s hands, criticism of economics’ failure to grasp true human behavior becomes instead: a criticism of humans for failing to meet the premises of economics. The markets are working exactly as they ought to, it is the humans that must be corrected.

Those corrections are exacted on individual bodies, in workplaces, education, prisons, and the clinic: workers are surveilled; medical education is reshaped in the vision of Carnegie and Rockefeller; racial hierarchy is naturalized, including by large language models; disabled “unproductive” bodies are crushed; gender roles (and associated care labor) are enforced by withholding reproductive and transgender care.

So why had the lofty ambition of health information failed so spectacularly? It did not fail entirely. It failed us, the workers, patients and public, but it is not for us. Bad EHR technology dominates because it serves enterprise first. Sunstein’s arguments are illogical, disconnected, and founded on preposterous data, but they serve the Obama administration’s ideology. If weak science and bad technology emerge from the marketplace of ideas, it is because the highest bidder is the ruling class. Political will is exerted, but veiled with “science” to insist those in power know what’s best. Meanwhile, individualism means the consequences always fall to those they rule. From the Hopkins Circle to the Chicago School of Economics to the Silicon Valley libertarians: mechanisms of control are cloaked in what social theorist Marco D’Eramo described as the “neo-feudalism of a cognitive aristocracy, whereby alleged superiority of knowledge or competence entitles a select few to rule over the ignorant masses.”

Perhaps the rage of rank-and-file healthcare workers crushed by the EHR comes partly from having thought that maybe we were among the cognitive aristocracy. Alongside the scientists, social workers, artists, and engineers of the professional-managerial class, our knowledge and competence were supposed to protect our autonomy, even as our work was deployed to control others. “What has happened to the professional middle class has long since happened to the blue-collar working class,” observed Barbara and John Ehrenreich, “Those of us who have college and higher degrees have proved to be no more indispensable, as a group, to the American capitalist enterprise than those who honed their skills on assembly lines or in warehouses or foundries.”

R’s HIV testing was negative. He remains ill. Because he does not have HIV, we are not funded to find out why. We printed lists of other clinics with long waiting lists. In any case, he had to focus on fighting to keep his housing. Anthropologist David Graeber wrote, “we were constantly being told that work is a virtue in itself—it shapes character or somesuch—but nobody believed that. Most of us felt work was best avoided, that is, unless it benefited others. But of work that did, whether it meant building bridges or emptying bedpans, you could be rightly proud. And there was something else we were definitely proud of: that we were the kind of people who took care of each other.” We, the physicians, nurse practitioners, nurses, medical assistants, case managers, lab technicians could only do what we could do for R, before turning to the long line of other patients. In the end, our inboxes were clear.

Medicine did not start with Hippocrates, despite how medicine tells it. Even our ancient cousins the Neanderthals cared for their sick. Healing traditions are fundamental to human survival. Science is the careful observation of patterns, experimentation, and sharing knowledge. Technology, Ursula Le Guin has argued, is how a tool-making species interacts with its material world. Which is to say, these things belong to all of us. There have always been other ways to do things.

What does radically improved healthcare look like? Erik Olin Wright once described four types of anticapitalists. Using that framework, we can imagine a different healthcare through his four strategies of change.

1. Smashing the existing system. When an existing system is completely intolerable, the ruptures of political revolution show glimpses of radical possibilities. There is no call to smash the healthcare system, but the COVID-19 pandemic may have been the rupture no one asked for. Despite scientific prowess and resources, the pandemic strained the American healthcare system, revealing its deep fissures, failures, hypocrisies, and instabilities. The devastating US death rate, inequality and rush to re-open commerce laid bare the state’s priorities. The frightening collapse of even sophisticated healthcare infrastructure (such as in Venezuela) is not theoretical. Medication, supply, and staff shortages, as well as instability from private equity profiteering, stress the system. Arguably, there is already regional collapse in the rural United States, such as in obstetrics and maternal health. Building on the ashes, however, requires more.

2. Taming the current system. This approach mitigates harm, though not with the non-solutions of nudging or technocratic veneers. Instead, it would require robust, meaningful prioritization of patients, labor, and community. It would mean securing the material infrastructure (housing, racial equity, clean water) that most shapes the conditions of human health. This requires political organization, but it has been done before and is building power now. It would require solidarity: physicians would have to stop identifying with capital or the cognitive aristocracy. It requires solidarity among formally educated colleagues (pharmacists, mid-level practitioners, nurses) and technicians, food prep, custodial, transportation, and home health aides. Healthcare workers would have to join with other service and care workers and unpaid care labor who make health possible. It would require coordinating with disability rights activists, community organizers and patient advocates.

3. Escaping the system. It can appear that the system is too large and overwhelming to change, in which case it is tempting to leave it entirely. There are multiple flavors of this: alternative medicine, concierge medicine, and Goop-flavored consumerism. Not everyone can escape, of course; individuals or families perhaps can, if they have the luck of no serious illness, injury, or disability requiring interdependence, or enough resources to buy the support. As insidious and exclusive as escaping can be, there is still something here. What is at the heart of what a “wellness spa” provides? Leisure, gentle touch, warm water and pleasure. Why should this not be part of what everyone deserves?

4. Erosion. There are always cracks, in which one can build small experiments of alternative care systems. These do not promote the escape of individuals, but of collective care. This is creating and expanding viable alternatives in the ruptures of an intolerable system. In the policed streets of Oakland, the Black Panthers organized not only self-defense squads but also Survival programs. They provided free breakfast for kids, transportation for disabled persons and free health clinics. So too did founders of Clinica de la Raza, Asian Health Services, and Food Not Bombs. The Panthers were threatening enough that J. Edgar Hoover tried to take them out. The threat also pushed Lyndon B. Johnson to co-opt those mutual aid programs into the War on Poverty, building a network of federally qualified health centers, which now provides not-for-profit care for over 30 million people.

Would the Panthers have made more radical changes if not suppressed? Yes, but their legacy of radical self-organization demonstrated the viability (and threat) of organizing. So did the Gay Men's Health Crisis providing care during the HIV crisis and ACT-UP’s direct action demanding scientific and public health attention. Harm reduction, now a pillar of public health, began as the anarchic practice of injection drug users risking arrest to distribute clean syringes to avoid infections. Erosion is far more common than institutional technocrats, who savvily adopt and claim the interventions as their own, would admit.