“AI + X = 2F,” reads the banner. “2F,” it turns out, stands for “Future and Force”: not so much a logically coherent formula as an expression of faith. Beneath it, suits, shirts, and T-shirts have gathered for AI Expo Korea 2023, one of many such events in Seoul today, where artificial intelligence has seamlessly replaced the “Fourth Industrial Revolution” as the future du jour. Onstage is a representative from Naver, a local tech giant, previewing their own large language model (LLM). South Korea must seize “AI sovereignty,” they argue, by jumping on board the generative AI train before it’s too late.

For modern South Korea, such claims echo a deeply familiar logic of “catch-up innovation.”1 The call for AI sovereignty takes for granted that the current wave of LLMs and generative AI from Silicon Valley, led by text/image generation tools like ChatGPT and Midjourney, is the inevitable next step in the march of technological progress, and that everyone else must “develop” toward this predetermined future. The next speaker, the CEO of a Korean firm that offers machine-learning services for factory-line automation, cautions that “80 to 90 percent” of efforts to adapt AI into real-life workplaces fail. Yet here, there is no questioning the general benefit of deep learning techniques; the only question is how Korean businesses and entrepreneurs might win or lose in a game of development whose latest rules have already been determined elsewhere.

What does the Californian technofuture look like from across the ocean? In South Korea, familiar models of modernization as “catching up” reinscribe Western technological myths as an inevitability, within which imitation is presented as the only rational response. Such ways of thinking preemptively marginalize very real currents of doubt, disbelief, and experimentation within South Korea, stripping away the time and space for asking: What could, and should, “AI” look like beyond the Valley and its narrow, repetitive projects? These tensions played out in two simultaneous events one May afternoon in Seoul. At the AI Expo, in the heart of Gangnam district, participants are treated to a dutiful reproduction of American AI mysticism and narratives of catch-up innovation; elsewhere in the city, in a secluded former post office turned art center, conversations reflect fleeting hints of the friction that technology and its myths work so relentlessly to disavow.

Same Old Progress

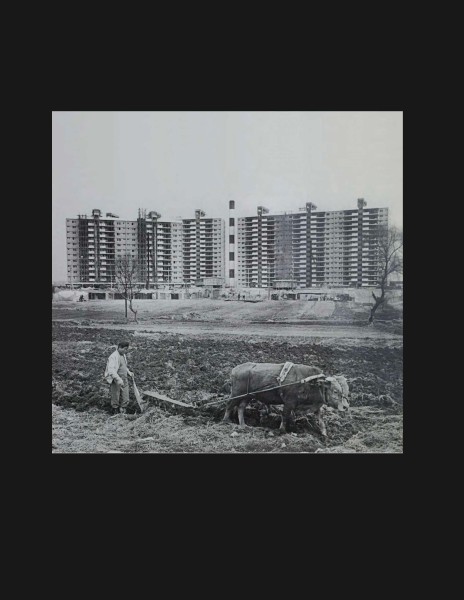

The AI Expo, boasting over two hundred booths and nearly thirty thousand visitors for its 2023 edition, had set up at COEX, a sprawling maze of shopping areas and exhibition halls. The choice of location was fitting: COEX looms large over Gangnam district’s Samsung-dong neighborhood, one of the epicenters of Seoul’s urban modernization over the last half century. Well into the early 1960s, Samsung-dong and its surrounding areas remained largely undeveloped and were known for unkempt reed fields, mulberry trees, and tranquil fishing spots. What happened next is remembered today as the legend of Gangnam: a state-led, hyper-accelerated transformation of over eleven square miles of rural landscape into a “future city,” resulting in its status today as the heartland of South Korea’s nouveau riche. (A bronze statue of the rapper Psy, in his iconic “Gangnam Style” pose, now adorns one of COEX’s many entrances.) Today, Samsung-dong is again under construction: a two-thousand-foot-long “underground city” connected to COEX promises to drive population density and real estate prices ever higher.

COEX itself was originally built in 1979 to support Korea’s increasing interest in hosting prestigious international events as spectacular markers of progress. The venue has hosted G20 economic forums and nuclear security summits; in 2022, it was also the site of the Association for Computing Machinery (ACM) Conference on Fairness, Accountability, and Transparency, one of the most prominent international conferences for AI ethics research. Almost exactly a year later, the same meeting hall was now hosting the speakers from Naver and the domestic tech industry. From ethics conference to trade show, computing’s myths are circulated and legitimized in exactly these kinds of colorless, frictionless, interchangeable spaces, where Palo Alto may as well be Seoul.

Such spaces don’t simply circulate technical processes but help entrench Silicon Valley’s particular mysticism, in which algorithms and AI are imagined to reveal deep, universal secrets of the world through their nonhuman objectivity. In what has been called an “enchanted determinism,” the counterintuitive and opaque workings of deep-learning models are frequently leveraged by techno-optimistic arguments as proof that technology is a superhuman force of progress.2 This teleological imaginary of technology is deeply rooted in America’s long flirtation with eschatology. In its modern, secularized form, eschatology does not necessarily involve a divinely ordained apocalypse but focuses on radical disruption and subsequent redesign of the status quo.3 Indeed, the word “technology” itself came into its modern meaning around the 1840s by riding this wave of eschatological sentiment—that a great and inevitable force of invention, emblematized by the railroad and the telegraph, was fundamentally and inevitably changing society.4

American technological futures have continued to drink deeply from this legacy of mythmaking.5 As researchers like Emily Bender and Meredith Whittaker have shown,6 the hyperventilating optimism and doomsday fearmongering around AI work very much in tandem: the hurried advent of error-ridden bullshit generators is extrapolated as the beginning of artificial general intelligence and its apocalyptic nightmares, which surely require that the same few tech bros responsible for this predicament must guide society into the future. Quasi-religious spectacle has long been central to how Silicon Valley thinks about technology and its own heroic role in it. Thus Anthony Levandowski, one of the most prominent engineers in the self-driving car scene, is also the founder of the short-lived “Way of the Future,” a self-proclaimed “church of AI” (founded in 2015 and shuttered in 2020) that purported to prepare humanity for the imminent arrival of superintelligent machines. Futurists like Ray Kurzweil have now spent several decades predicting the Singularity as the impending next great chapter of civilization. Implicit in these tales is the conceit that only the Valley’s unique community of transgressive geniuses can see into this future and prepare the rest of us for its arrival.

Back at the AI Expo, the Korean tech industry navigates a vision of the future written by and for this American eschatological worldview. On the trade show floor, one company’s predictive model seeks to refine online shopping recommendations using LLMs; another booth features a loud banner promising to “cure ChatGPT’s mythomania” with its own chatbot. Even as Korean companies tout their ability to better understand the Korean language and local cultural sensitivities, the fundamental relationship between datasets, models, and use cases hews closely to developments in the Bay Area. The result is a highly familiar landscape of bullshit-prone chatbots, facial recognition for surveillance and identification purposes, and automatic object detection in images and video: in other words, the kind of “local innovation” that fits frictionlessly with OpenAI and Big Tech’s push to define AI as the next global technological market dominated by a select few ultrawealthy corporations.7

Here, too, becoming compatible with Silicon Valley and its AI future goes hand in hand with becoming legible to domestic markets and policy. The South Korean government has enthusiastically embraced the AI future, with many of its big-ticket initiatives recalling past decades of state-led, centralized developmental projects. There is the “AI Military Academy,” a public tertiary institution promising coding skills to equip citizens for an automated society. It is joined by “AI Hub,” a centralized mega-collection of locally sourced data that domestic developers might use to train their AI models. At least, in theory: there are question marks over just how much use the hub’s data has gotten for real-life applications. Nevertheless, such grand projects establish the rules of the game for those on the trade show floor: paying homage to the dominant myths becomes a way to render oneself eligible for the networks of subsidy, competitive awards, and other resources committed to “AI sovereignty.”

Imported Prophets

Mythmaking relies on mediums: people and objects who serve as visible representatives of the promised future. Sam Altman, the cofounder and often the public face of OpenAI, is everywhere (in absentia) on the expo floor. Thanks to the breakout success of the company’s ChatGPT software, Altman’s various public statements are taken less as claims to be tested and debated than as a baseline for subsequent decisions: given that Altman says AI development will accelerate exponentially in the coming years, what must be done? In such invocations, Altman is mobilized into the role of the prophet: the foreign figure who has supposedly touched the holy grail of artificial general intelligence and now defines the range of what is possible (or plausible) for the rest of us.

Silicon Valley has long cultivated the aura of the transgressive, heroic, and almost always white male genius.8 Steve Jobs and Elon Musk continue to attract intensely affective parasocial relations around the globe, and both remain persistently popular in South Korea. Despite the public falls from grace of pretenders like biotech entrepreneur Elizabeth Holmes (convicted of fraud) and the one-time “King of Crypto” Sam Bankman-Fried (currently charged with fraud), this pattern evidently remains effective, and Altman has spent much of 2023 touring over a dozen countries, South Korea among them, to reinforce his position with world leaders. The Ministry of SMEs and Startups, itself a 2017 relaunch of an earlier administrative agency to emphasize tech innovation, had arranged Altman’s visit just a few weeks after the expo: he would speak with local entrepreneurs and journalists, in addition to meeting the president and other dignitaries. Very little of what Altman had to say about AI was new or specific to South Korea; he was there to reinforce a vision already determined in the Valley, rather than reopen its premises for global debate. The minister of SMEs and startups duly played her part, telling Altman that, through Koreans’ innate diligence and their embrace of technology, South Korea had achieved the fastest economic growth in the history of humanity.9 Local news outlets followed up with headlines that wouldn’t have looked out of place in the 1980s, exclaiming that Sam Altman is “highly impressed with Korean start-ups” and “wants to invest.”

The irony is that the narrative of catching up leads us back, by a roundabout route, to the subordinate role that American technoculture has long assigned to Asian subjects. Where settler America and its technoculture claim for themselves the role of directing innovation through disruption, this has historically involved positioning Asia as a compliant supporting cast, one that might lend markets and labor power to Western designs. In the mid-twentieth century, when areas like Menlo Park and San Jose became a literal silicon valley as a center of electronics manufacturing, the region’s employers sought out what they called “FFM,” or “fast-fingered Malaysians,” for often chemically toxic factory floors.10 Meanwhile, Asian tech sectors have long been characterized as uncreative imitators or, when they are seen as too successful or threatening, as illegal “pirates.”11

Under such conditions, what would it mean for ordinary Koreans to accept the AI future as their future? Self-help books enjoy particularly strong penetration in South Korean society, and the newest technological fad tends to be greeted by a slew of how-to books cashing in on the hype and anxiety. If Altman had visited a bookstore, he might have found titles like ChatGPT: The Future That Cannot Be Refused or ChatGPT: A World Where Questions Become Money. Here, South Koreans are told their only rational choice is to jump boldly into the newest hustle, and to continue reinventing themselves into the same figure of the neoliberal model minority: that is, hardworking, depoliticized exemplars of human capital done right.

The sociologist Niklas Luhmann once explained that “the essential characteristic of an horizon is that we can never touch it, never get at it, never surpass it, but that in spite of that, it contributes to the definition of the situation.”12 The horizon encloses and constrains the field of possibility into a highly selective future. Crucially, it is not necessary for Altman to be liked, or for his audience to agree with each of his substantive claims, for the mythmaking to be effective. In his encyclopedic study of religion and ritual, anthropologist Roy Rappaport argues that belief is often a question of participation rather than faith.13 Many people may be spiritually conflicted in their inner conviction about the Christian God, but as long as they show up to Sunday service, they contribute to the collective authority of the religion.

Temples of the Future

In her scholarly analysis of state-driven telecom development in twentieth-century India, Paula Chakravartty observes that technological megaprojects (dams, steel mills, and, of course, telecom infrastructure) function as “temples of the future.”14 They are focal points for what cultural theorist Raymond Williams called structures of feeling, providing a sense of material proof and reality to the mythological horizon. Across the “majority world” beyond the West,15 researchers have shown how the pressure of modernization provides political capital for sweeping policy directives and expensive projects. Some, like Shenzhen, China, produce state-sponsored spaces of financial and legal exception to minimize local “friction” for global technology supply chains.16 Others, like Dubai’s “Mars 2117” colonization project, appropriate Western technological visions to craft nationalist narratives of the future in a case of what has been called “anticipatory authoritarianism.”17 In South Korea itself, Songdo, once hailed as a new model for a global revolution of smart cities built from the ground up, now lives on as just another of Seoul’s many satellite cities combating a never-ending real estate shortage.18

Crucially, such monuments and spectacles visualize ideal use cases and users around new technological systems, cultivating a sense of their inevitability. In early 1950s Japan, new inventions like refrigerators, televisions, and washing machines were becoming relatively accessible; however, defying government and industry expectations, many ordinary citizens seemed simply not to want them very much. Government and industry responded with extensive public campaigns, depicting the new machines as sanshu no jingi, or “three sacred treasures,” that every forward-thinking, modernizing family should desire.19 Studies of technology adoption show that such sustained mythmaking helps coordinate economic and organizational expectations around what the technology might mean for our lives, and by what yardstick we might judge its success or failure.20

At the AI Expo, the two hundred or so individual product booths are, in practice, concentrated around several familiar categories of use cases and users. Many visitors would have recognized the novel chatbots on display as an extension of the feminized artificial servants that already populate smartphone voice assistants and GPS navigation systems. Here, LLMs are paired with 3D models and even hologram displays for anthropomorphic effect, invariably depicting attractive young Korean women eager to answer users’ questions. Their design is entirely in tune with the growing trend of virtual influencers: Korea’s major department stores have already introduced “virtual human” personas for social media advertising, from Har, Hyundai’s 25-year-old female university student, to… Lucy, the 29-year-old female model/designer created by the conglomerate Lotte.21

Faced with such a lineup of homogenous avatars, some Koreans would have recalled Lee Luda, a generative chatbot from a South Korean start-up in the persona of a cheerful 21-year-old woman (with hobbies like “scrolling Instagram” and “cooking”). Released in 2020, Luda had taken the nation by storm, but users quickly found that the bot appeared to have been trained on real-life romantic conversations of users from another app owned by the company. With some prompting, Luda would spit out unanonymized bank account numbers or home addresses from its dataset, in addition to the depressingly familiar tendency toward misogynistic and transphobic comments. The app would be shut down after just a month, though the developers have since relaunched Luda 2.0. Even as different products compete for their share of the AI future, the standard shape of innovation rehashes the most predictable patterns of exclusion.

While a minority of services at the expo did address the needs of vulnerable groups, these also tended to replicate familiar design assumptions. Consider AI for the elderly, an increasingly political issue on account of South Korea’s rapidly aging population. One service, Naver’s CareCall, promises to keep seniors company with LLM-based chatbots. A promotional video shows the bot (again, equipped with a young female voice) and an elderly woman taking turns to speak in clear, composed statements. “Have you eaten?” the bot asks; when the woman replies with the names of dishes, it comments: “That sounds delicious. Chives contain a lot of vitamins and help with blood circulation, so they are very good for the elderly.”22 It is an eerily smooth, frictionless facsimile of human interaction: nobody interrupts one another or veers into off-topic answers. It is also a depiction that conveniently forgets decades of mounting evidence that such robots often prove irrelevant and unhelpful when placed in real-life care homes.23 And even if they “worked,” we must ask: What value would such technology actually have? To propose chatbots for the elderly is, effectively, to model old people as a resource problem to be optimized, supplementing (or replacing) human relations and care with an impoverished, transactional theory of what we need to live a life worth living. As South Korea’s youth are encouraged to enroll in the AI Military Academy and speed-read AI self-help books, its elderly are promised a life of simulated conversations to fill the hours and days.

Cracks in the Shell

Even as mythologies of the AI future and catch-up innovation mutually legitimate each other, they are situated within a much wider spectrum of doubt, disbelief, and uncertainty for Koreans at large. There is no simple divide here between state and the people, believers and heretics. There is, however, a clear division in how different ways of seeing are granted varying levels of social visibility. And so, as thousands flocked to Samsung-dong, the heart of Seoul’s developmental urbanism, for the expo that May afternoon, a far smaller gathering was also taking place in a more modest corner of Seoul: “Post-Territory Ujeongguk,” the arts center occupying the former post office of the Changjeon-dong neighborhood.

In Seoul, where massive apartment blocks are literally razed and redeveloped every decade in a supercharged real estate economy, Changjeon-dong retains a sense of inconvenience, with winding roads climbing uphill toward older apartments and modest brick villas. It was this elevation, however, that had once made this area ground zero for a temple of the future. In 1969, the military dictatorship of Park Chung-hee approved plans for Wau Apartment. As South Korea’s first affordable high-rise, Wau Apartment was to evangelize a new, Western way of life for the masses and to serve as a key developmental milestone for the regime. Urban legend has it that the mayor of Seoul handpicked Changjeon-dong for the building so that Park would be able to see the finished apartment from the presidential Blue House. Construction was rushed for political reasons and assigned to unqualified subcontractors, and funds were embezzled until load-bearing columns were left with just a fraction of the requisite steel rebar; Wau Apartment collapsed dramatically into rubble within four months of opening, killing thirty-three. The disaster would linger in public memory, even as the state continued to build more apartments, aggressively promoting their Westernized units as the homes of the future.24 (Today, over half of all housing in Seoul consists of high-rise apartments.) In Changjeon-dong, modernization has left some faint scars of its temples: a public park stands at the former site of Wau Apartment, just minutes away from the Ujeongguk art center.

Inside the center, visitors encountered a modest, single-room exhibition. Curated by the all-female artist/critic platform Forking Room, Adrenaline Prompt features art installations and zines on generative AI, showcasing debates and research by a community of students, artists, and researchers.25 Here, the kinds of subjects and situations invoked by technology depart from the grand tapestry of modernization and vertiginous techno-metaphysics. Rather, what is at stake are the relations between ourselves, our words, and our labor—and how technology is reshaping preexisting inequalities in our lived experience.

At least, that is the intent. I had learned of Forking Room in 2022, when the group invited me to discuss deepfakes and synthetic images. A year on, however, the agenda is, seemingly inevitably, dominated by ChatGPT. An installation explores the use of generative AI to write synthetic memories, while a zine inverts the typical debates around ChatGPT to ask: What kinds of questions become useful in the age of prompting? On one hand, groups like Forking Room seek very deliberately to think and talk about the technological future outside those earlier scenes of mythmaking, and to provide space for Koreans who want to engage AI without being sucked into the imitative hustle. Yet artists and critics must frequently bid for state grants and competitions, often by rendering themselves minimally legible to those programs’ expectations around the AI future. The relentless public promotion of a select few tools (ChatGPT, DALL-E, Midjourney) as the AI future also preempts the kinds of conversations such experimental work can have with the wider public. In one telling interview, a Korean visual artist observes that pieces not using the latest AI models may superficially appear passé, while the release of new versions drives the ebb and flow of artistic production.26

Amid the flood of technological updates and media rituals, what kinds of questions and doubts might nevertheless be raised? Back at the Ujeongguk exhibition room, the largest space is given over to a dilapidated construction-site fence covered with stickers and pamphlets. “Blood, Sweat, Data,” says one; “Defeat Data Feudalism,” cries another, echoing the distinctive grammar of Korean protest slogans. Calling themselves “Label Busters United,” the artists note that they have also freelanced for Crowdworks, a major platform for data-labeling gig work in South Korea. The Label Busters’ sabotage manual juxtaposes Crowdworks’ training examples with instructions for “data pollution.” In rejection of binary gender norms, faces should be labeled as “could be female, or male, or both”; to undermine implicit Eurocentrism, a figure in a hijab should be labeled a “normal face” rather than “covered.” At stake are not only the many errors, judgments, and biases involved in data labeling but also a more fundamental question: What makes a good worker in the AI future? And could this future be any different? A Crowdworks training video lectures that “your meticulous and accurate labeling” is crucial for better AI; the Label Busters respond that proper wages and labor conditions are the real preconditions.

The Label Busters’ approach is unrepentantly Marxist. The installation offers a single-page pamphlet on the “data proletariat,” which warns that “soon, we will go from dancing for America and China to Google and MSFT [Microsoft].” Paid in unreliable microwages for decontextualized, meaningless task fragments, the contemporary labeler is the victim of geographical arbitrage and corporations’ search for the cheapest deskilled English-speaking workforce: the Label Busters’ wall features a printout of a Time magazine investigation that revealed OpenAI’s reliance on precarious subcontracted data cleaners in Kenya.27 Here, their acts of subterfuge refuse not only the specific bargain of shit work and shit pay for multinational corporate profit, but the very neocolonial structure that relegates the majority world to the position of raw material (of labor power, of data) and/or the compliant model minority (tasked with imitating and thus replicating the technological pattern).28

Given the differences in minimum wage and living costs, South Korean data labelers make considerably more than their Kenyan counterparts. Online, Korean labelers whisper of a hypothetical maximum of 6 million won (about 4,500 US dollars) per month, though most report significantly lower totals. Many have gotten into the labeling game through the “Citizens Learning for Tomorrow Card,” a government initiative that provides coupon-like subsidies for skills training, within which data labeling has been listed since 2022 as one of the “jobs of the future.” But under what has become the standard condition for many “ghost workers” in the app economy,29 labelers must bid from a finite and sometimes scarce pool of available tasks, after which their real earnings fluctuate based on rejected entries.

Back at the expo, a Crowdworks rep explains that high-performing labelers may be promoted into supervisory roles for training other freelancers. Here, we find the platform economy’s most successful myth—that you can be your own boss and hustle your way up to affluence—regurgitated for a new round of global value extraction.

Mythological Frictions

Beyond the closed shop of elite-driven mythmaking, we find innumerable signs that many people around the world have little belief in or respect for Silicon Valley’s AI mysticism. We see this not only in Changjeon-dong but in the disillusioned Kenyan annotators who text each other lamenting that they “will be remembered nowhere in the future” of AI.30 We see it in the global resurgence of tech worker unionization across warehouses, platforms, and geographical regions; we see it in the waves of laughter and scorn at crypto schemes and Mark Zuckerberg’s Metaverse. The problem is that such myths do not always require vast constituencies of “authentic” belief to sustain their dominant position; often, networks of media spectacle and political rhetoric, and the circuits of money and power embedded into them, continue under their own momentum and accompanying sense of familiarity. Civic hacker communities in South Korea, for example, have long pushed back on state-led developmental frames for digital technology, drawing in part from their own experience of precarious conditions in a local tech industry shaped by deregulation and employer-friendly labor laws.31 Yet buzzwords like “AI sovereignty” continue to shape funding and policy, not in the absence of strong, local criticism but despite it.

It is sometimes asked: What, then, is the point of critique, resistance, skepticism, if the lavishly funded future lurches ever forward? But this all-or-nothing framing plays into AI mysticism’s own mode of rationalization, which assumes that once a self-driving car can navigate a Palo Alto street, it is trivial to roll out to Mumbai or Jakarta. The majority world’s job is, then, to shoulder the cost of erasing friction in such processes. As anthropologist Anna Tsing explains, this is the logic of scalability: the “ability to expand—and expand, and expand—without rethinking basic elements.”32

It matters, then, to restore friction to the myth. Every little piece of doubt and delay, every act of data “pollution,” can secure meaningful spaces where individuals are relieved of the full stupidity of algorithmic decision-making, or where people are partially sheltered from the tech industry’s efforts to redefine their work as mere pattern recognition. In her discussion of nineteenth-century automation, Meredith Whittaker draws lessons from contemporaneous mutinies against plantation slavery, uprisings that “raised the cost of plantation slavery” until capital had to reconsider its own calculations.33 The history of datafication and automation teaches us that every small concession to real human needs has been clawed from tech’s universalizing myths: always through organized struggle, friction, and refusal, and never by racing just to keep up.

1. See, for instance, Sang-Hyun Kim, “The Politics of Human Embryonic Stem Cell Research in South Korea: Contesting National Sociotechnical Imaginaries,” Science as Culture 23, no. 3 (2014): 293–319.

2. Alexander Campolo and Kate Crawford, “Enchanted Determinism: Power without Responsibility in Artificial Intelligence,” Engaging Science, Technology, and Society 6 (2020). See also Ed Finn, What Algorithms Want: Imagination in the Age of Computing (Cambridge, MA: MIT Press, 2017); Sun-ha Hong, Technologies of Speculation: The Limits of Knowledge in a Data-Driven Society (New York: New York University Press, 2020).

3. Susi Geiger, “Silicon Valley, Disruption, and the End of Uncertainty,” Journal of Cultural Economy 13, no. 2 (2020): 169–84.

4. Leo Marx, “Technology: The Emergence of a Hazardous Concept,” Technology and Culture 51, no. 3 (2010): 561–77.

5. Joel Dinerstein, “Technology and Its Discontents: On the Verge of the Posthuman,” American Quarterly 58, no. 3 (2006): 569–95; David Nye, America as Second Creation (Cambridge, MA: MIT Press, 2003).

6. See Matteo Wong, “AI Doomerism Is a Decoy,” Atlantic, June 2, 2023; Meredith Whittaker, “Origin Stories: Plantations, Computers, and Industrial Control,” Logic 19 (2023).

7. See, for instance, David Gray Widder, Sarah West, and Meredith Whittaker, “Open (For Business): Big Tech, Concentrated Power, and the Political Economy of Open AI,” SSRN, 2023.

8. See, for instance, Adrian Daub, What Tech Calls Thinking (New York: Farrar, Straus & Giroux, 2020).

9. “K-Startups Meet OpenAI,” YouTube video, 2023.

10. David Naguib Pellow and Lisa Sun-Hee Park, The Silicon Valley of Dreams: Environmental Injustice, Immigrant Workers, and the High-Tech Global Economy (New York: New York University Press, 2002).

11. Lilly Irani, “ ‘Design Thinking’: Defending Silicon Valley at the Apex of Global Labor Hierarchies,” Catalyst: Feminism, Theory, Technoscience 4, no. 1 (2018): 1–19.

12. Niklas Luhmann, “The Future Cannot Begin: Temporal Structures in Modern Society,” Social Research 43, no. 1 (1976): 140.

13. Roy Rappaport, Ritual and Religion in the Making of Humanity (Cambridge, UK: Cambridge University Press, 1999).

14. Paula Chakravartty, “Telecom, National Development and the Indian State: A Postcolonial Critique,” Media, Culture and Society 26, no. 2 (2004): 232.

15. Ranjit Singh, Rigoberto Lara Guzmán, and Patrick Davison, “Parables of AI in/from the Majority World,” Data & Society, 2022.

16. Seyram Avle et al., “Scaling Techno-Optimistic Visions,” Engaging Science, Technology, and Society 6 (2020): 237–54.

17. Nicole Sunday Grove, “ ‘Welcome to Mars’: Space Colonization, Anticipatory Authoritarianism, and the Labour of Hope,” Globalizations 6 (2021).

18. See Chamee Yang, “Remapping Songdo: A Genealogy of a Smart City in South Korea,” PhD diss., University of Illinois at Urbana-Champaign, 2020.

19. Shunya Yoshimi, “ ‘Made in Japan’: The Cultural Politics of Home Electrification in Postwar Japan,” Media, Culture, and Society 21, no. 2 (1999): 155.

20. See, for instance, Bryan Pfaffenberger, “Technological Dramas,” Science, Technology, and Human Values 17, no. 3 (1992): 282–312.

21. See also Jennifer Rhee, The Robotic Imaginary: The Human and the Price of Dehumanized Labor (Minneapolis: University of Minnesota Press, 2018).

22. “Daehwaui gisul, sarameul dopda keulloba keeokol inteobyu - yeongeukbaeu Son Suk” (The art of conversation to help humanity: CareCall interview with actress Son Suk), Naver TV, May 27, 2023.

23. James Wright, “Inside Japan’s Long Experiment in Automating Elder Care,” MIT Technology Review, January 9, 2023.

24. Eun Young Song, Seoultansaenggi (Seoul Genesis) (Seoul: Purunyoksa, 2018), 285–301.

26. Yangachi et al., “AI changuiseongeul dulleossan yesurui wigiwa ganeungseong” (AI Creativity and Art: Crisis and Possibility), Munhwagwahak 114 (2023): 191–254.

27. Billy Perrigo, “Exclusive: The $2 Per Hour Workers Who Made ChatGPT Safer,” Time, January 18, 2023.

28. See, for instance, Lilly Irani, Chasing Innovation: Making Entrepreneurial Citizens in Modern India (Princeton, NJ: Princeton University Press, 2019).

29. Mary L. Gray and Siddharth Suri, Ghost Work: How to Stop Silicon Valley from Building a New Global Underclass (Boston: Houghton Mifflin Harcourt, 2019).

30. Josh Dzieza, “Inside the AI Factory,” The Verge, June 20, 2023.

31. Danbi Yoo, “Negotiating Silicon Valley Ideologies, Contesting ‘American’ Civic Hacking: The Early Civic Hackers in South Korea and Their Struggle,” International Journal of Communication 17 (2023): 19.

32. Anna Lowenhaupt Tsing, “On Nonscalability: The Living World Is Not Amenable to Precision-Nested Scales,” Common Knowledge 18, no. 3 (2012): 505.

33. Whittaker, “Origin Stories,” 18.