Machine learning is the practice of training algorithms to classify and predict in order to support decision-making. In recent years, it has skyrocketed in popularity and ubiquity. It’s no stretch to say that most services we use now incorporate machine learning in one way or another. In its pervasiveness, machine learning is becoming infrastructural. And, like all infrastructure, once it matures it will become invisible.

Before that happens, we should develop a way to disrupt it.

A relatively nascent field called “adversarial machine learning” provides a starting point. Described as the intersection of cybersecurity and machine learning, this field studies how these algorithms can be systematically fooled, with or without knowledge of the algorithm itself—an ideal approach, since the specifics of many algorithms are trade secrets. And given the fact that the machine learning regime effectively makes all of us its workers—most of our online activity is in fact labor towards the improvement of these systems—we as individual users have an opportunity to inflict major sabotage.

Consider an early and now-ubiquitous application of machine learning: the everyday spam filter. The job of the spam filter is to categorize an email as either “spam”—junk—or “ham”—non-spam. The simplest case of adversarial machine learning in this context is constructing an email that is spam—a pitch for a pharmaceutical product, for example—but in such a way that the spam filter misclassifies it as ham, thus letting it through to the recipient.

There are a variety of strategies you might employ to accomplish this. A relatively simple one is swapping out the name of “Viagra” for something more obscure to a machine but equally readable to a human: “Vi@gr@”, for example.

Today, most spam filters are resistant to this basic obfuscation attack. But we could consider more sophisticated approaches, such as writing a longer, professional-looking email that hints at the product without ever explicitly mentioning it. The hint may be strikingly obvious to a human, but incomprehensible to a spam filter.

A spam filter is less insidious than many other applications of machine learning, of course. But we can generalize from this example to develop techniques for disrupting other applications more worthy of sabotage.

Poisoning the Well

Most machine learning models are constructed according to the following general procedure:

- Collect training data.

- Run a machine learning algorithm, such as a neural network, over the training data to learn from it.

- Integrate the model into your service.

Many websites collect training data with embedded code that tracks what you do on the internet. This information is supposed to identify your preferences, habits, and other facets of your online and offline activity. The effectiveness of this data collection relies on the assumption that browsing habits are an honest portrayal of an individual.

A simple act of sabotage is to violate this assumption by generating “noise” while browsing. You can do this by opening random links, so that it’s unclear which are the “true” sites you’ve visited—a process automated by Dan Schultz’s Internet Noise project, available at makeinternetnoise.com. Because your data is not only used to make assumptions about you, but about other users with similar browsing patterns, you end up interfering with the algorithm’s conclusions about an entire group of people.

Of course, the effectiveness of this tactic, like all others described here, increases when more people are using it. As the CIA’s Simple Sabotage Field Manual explains, “Acts of simple sabotage, multiplied by thousands of citizens, can be an effective weapon…[wasting] materials, manpower, and time. Occurring on a wide scale, simple sabotage will be a constant and tangible drag on…the enemy.”

Attacks of this sort—where we corrupt the training data of these systems—are known as “poisoning” attacks.

The Pathological and the Perturbed

The other category of adversarial machine learning attacks are known as “evasion.” This strategy targets systems that have already been trained. Rather than trying to corrupt training data, it tries to generate pathological inputs that confuse the model, causing it to generate incorrect results.

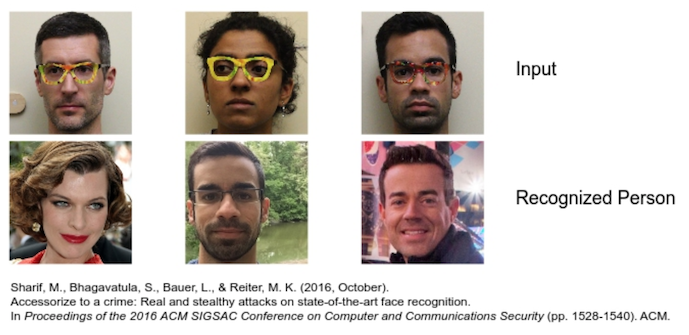

The spam filter attack, where you trick an algorithm into seeing spam as ham, is an example of evasion. Another is “Hyperface,” a collaboration between Hyphen Labs and Adam Harvey, a specially designed scarf engineered to fool facial recognition systems by exploiting the heuristics these systems use to identify faces. Similarly, in a recent study, researchers developed a pair of glasses that consistently cause a state-of-the-art facial recognition system to misclassify faces it would otherwise identify with absolute certainty.

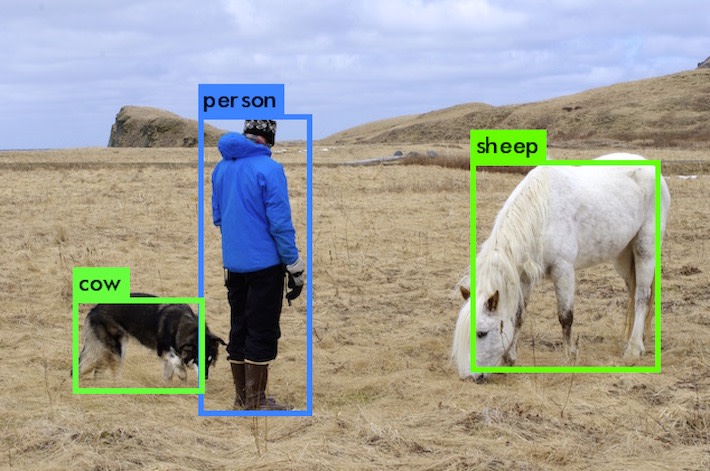

Another domain of computer vision is object recognition. If facial recognition is about recognizing faces, object recognition is about identifying objects. For instance, you might ask an object recognition system to look at a picture of a panda and identify the panda.

Recently, computer science researchers developed a “universal perturbation algorithm” capable of systematically fooling many object recognition algorithms by adding relatively small amounts of noise to the image. This noise is almost imperceptible to a human, but causes the object recognition system to misclassify objects. For example, an image of a coffee maker that has been treated with the universal perturbation algorithm will look like a macaw to the object recognition system.

The writer Evan Calder Williams defines sabotage as:

the impossibly small difference between exceptional failures and business as usual, connected by the fact that the very same properties and tendencies enable either outcome.

Serendipitously, this is the literal mechanic by which the universal perturbation algorithm works. An object recognition neural network, for instance, takes an image and maps it to a point in some abstract space. Different regions of this space correspond to different labels—so an image of a coffee maker ideally maps to the coffee maker region.

But these regions are irregular in form. The categories of macaw and coffee maker may be very similar in certain dimensions. That means it takes only slight nudges—accomplished by introducing the noise into the image—to push a coffee maker image into the macaw region.

One advantage of the perturbation algorithm is that its distortions are invisible to the human eye. Sabotage that sticks out as sabotage is not very successful. Well-executed sabotage leaves doubts not only about its origins but also whether it was deliberate or accidental. That is, it leaves doubts about whether or not it was sabotage at all.

By contrast, if you use Internet Noise, the random browsing that results might be identifiable as such, in which case it may simply be excluded from the data set. This may help you escape the training regime, but it won’t smuggle misinformation into the system to disrupt that regime for others.

If, however, the random browsing was not random but generated according to subtly misleading patterns—like the imperceptible noise in the perturbed images—then the misinformation may make its way into the training data. And if there is enough of it, it will poison the model as a whole.

Protracted People’s War

Research in adversarial machine learning typically falls under the rubric of cybersecurity, so it has some similarities to the field of cryptography. The success of cryptography depends on difficult mathematical operations, such as factoring prime numbers.

These operations are relatively easy for individuals or small organizations to take advantage of, and mostly resilient against adversaries with more resources. The marvel of cryptography is that it is a technology that runs against the typical gradients of power. It favors the less powerful against the more powerful.

Perhaps something similar is at work with adversarial machine learning, favoring the resister against the machine. To quote Ian Goodfellow and Nicolas Papernot, two leading researchers in the field:

On the theoretical side, no one yet knows whether defending against adversarial examples is a theoretically hopeless endeavor ... or if an optimal strategy would give the defender the upper ground.... On the applied side, no one has yet designed a truly powerful defense algorithm that can resist a wide variety of adversarial example attack algorithms.

Like cryptography, the goal of such disruption is not necessarily a single act of sabotage, but rather a distributed undertaking that decreases the cost-effectiveness of data collection at scale. In the case of cryptography, the more people who use encrypted communication applications like Signal, the more expensive state surveillance becomes—hopefully to the point where it becomes infeasible. Similarly, the less accurate these machine learning systems become due to user disruption, the more costly they are, and the less likely they are to be relied upon.

While adversarial machine learning is still in its early years, perhaps it will provide a tactic to complement the parallel struggles on the policy level. We are under no obligation to honestly train these models. If regimes of algorithmic discipline are implemented without our consent, we will need a toolbox of resistance that can directly disrupt their effectiveness.