For the past few months, news outlets have been running stories about Neuralink’s newest feat: the first successful implantation of their “brain chip” in a human recipient. Initial reports were shrouded in mystery. Questions about where (in the brain), what (type of device was used), and in whom (the device was implanted) initially went unanswered, adding to the public’s fascination with the story. What the company did share was that the receiving individual could use the implanted device to control a cursor on a computer screen, suggesting it might be connected to the brain regions that control movement. Because the effect of a neural implant varies as a function of its location, the placement of the chip would reveal something of its inner workings.

The patient’s story debuted online in March on X (formerly Twitter), whose owner is the same as that of Neuralink—billionaire Elon Musk. It has been confirmed that the chip was implanted on the motor regions of the brain. Although the recipient was paralyzed from the neck down, they could now play video games simply by thinking about it.

At the heart of this story lies the possibility that technology can be used to allow a person to overcome their physical limitations and do things that their bodies would otherwise be unable to. To fully understand the implications of such capabilities, we must consider this event in a broader historical, technological, and bioethical context.

Is This New?

Though many people were flabbergasted by Neuralink’s news, the company’s advance in this field was more incremental than many realize.

Neuralink’s brain chip belongs to a well-established and -studied class of technologies known as “brain–computer interfaces,” or BCIs. Not only do FDA-approved BCIs already exist; they are more common than most people expect. At the time of this writing, there are approximately 1.2 million people walking around with a medically implanted BCI. Moreover, while Neuralink enjoys immense popularity on news outlets, it is also not the only player in this field: in addition to many government-funded research labs and institutes around the world, private companies like Advanced Bionics and Blackrock Neurotech have been testing, manufacturing, and selling them for many years.

So, how do these things work? And what can, and cannot, be done with them?

How Brain–Computer Interfaces Work

First, let’s lay down some terminology, as the same technology might be referred to in different and confusing ways. “Brain–computer interface” (or “brain–machine interface”) is an umbrella term for a variety of combinations of hardware and software designed to communicate directly with a person’s brain. Of course, all communication technologies ultimately work by reaching a person’s brain; language itself is, in a sense, an agreed-upon code to induce very specific signals in another person’s brain—signals that represent concepts and objects (cognitive psychologist Chantel Prat calls it the “ultimate brain-to-brain technology”). BCIs, however, are designed to achieve the same result while bypassing the normal sensory and motor channels.

There are many reasons why we might want to do that. Some of them are mostly important for neuroscientists; sending signals to the brain is an excellent way, for example, to test our theories of how the brain might work. But by and large, the main motivation to develop BCIs has been medical: due to accidents or disease, some individuals have lost some of these sensory and motor channels, and, so long as their brain remains functional, a BCI could work as a bridge around the damaged part of the body. A person who is paralyzed, for example, could control a wheelchair simply by thinking, or a blind person could receive images from a camera directly into their brain. Less commonly, BCIs could be used to transmit brain signals to something other than a computer, such as the patient’s own limbs (as in a 2022 study, to bypass a patient’s broken spine), or even to another person’s brain (as in 2018, again to play video games).

While they all solve the common problem of communicating with a person’s brain, BCIs differ widely in terms of the technology they use to do so. Roughly speaking, we can classify these technologies along two axes: whether the device needs to be surgically implanted or not, and whether it listens to information from the brain or transmits information to it.

BCIs that do not need to be implanted are called “non-invasive,” and are built upon technologies that are available in most research universities and hospitals, such as magnetic resonance imaging (MRI) scanners and electroencephalograms (EEGs). In fact, the term “brain–computer interface” was originally coined by researcher Jacques Vidal, who in 1977 demonstrated how brain waves picked up by an EEG cap could be processed in real time to let a patient control (yet another) cursor on a screen. (As True Detective’s Rust Cohle said, “Time is a flat circle.”)

While they might not be as flashy or sound as science fictiony as the implanted devices, the fact that they do not require surgical procedures does offer some advantages. For one, this allows much faster development: most BCI research is, in fact, done with noninvasive means. It might also allow for simpler, portable solutions: a wheelchair that can be controlled with an EEG sensor in the headrest is easier to produce than a wheelchair that requires a connection to an implanted device (neither of these, however, has ever been produced).

The downside is that the brain signals that can be picked up by noninvasive means are coarse. The brain contains 86 billion neurons, all of which convey information, but even the highest-resolution noninvasive recording methods can pick up only an average among millions of them. Trying to decode brain signals with this resolution is possible, but as difficult as trying to decode a football game by interpreting the crowd noises made outside the stadium: you can make out that some things are happening, and, from the location in the stadium, you can make guesses as to which team they are happening to. But it is not going to be very precise.
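
To make the averaging problem concrete, here is a minimal sketch in Python. It is a toy simulation, not a model of any real recording method: the population size, the firing rates, and the function names (`simulate_population`, `pooled_signal`) are all assumptions chosen for illustration. It compares a "quiet" population with one in which a hundred neurons fire vigorously, and shows how little that difference moves the single pooled number a coarse sensor would see.

```python
import random

def simulate_population(n_neurons, n_active, baseline_hz=5.0, active_hz=50.0, seed=0):
    """Return per-neuron firing rates: a few strongly active cells amid baseline noise.

    All numbers are illustrative assumptions, not physiological measurements.
    """
    rng = random.Random(seed)
    rates = [max(0.0, rng.gauss(baseline_hz, 1.0)) for _ in range(n_neurons)]
    # Pick a small subset of neurons and make them fire at a much higher rate.
    for i in rng.sample(range(n_neurons), n_active):
        rates[i] = active_hz
    return rates

def pooled_signal(rates):
    """What a coarse sensor sees: one average over the whole population."""
    return sum(rates) / len(rates)

quiet = simulate_population(100_000, n_active=0)
busy = simulate_population(100_000, n_active=100)

# An electrode next to an active cell would see a tenfold jump in firing rate;
# the pooled average of the two populations differs by well under 0.1 Hz.
print("single active neuron:", max(busy), "Hz")
print("pooled difference:", pooled_signal(busy) - pooled_signal(quiet), "Hz")
```

In this toy setting, the message carried by the hundred active neurons is all but invisible from the outside, which is the stadium problem in miniature.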

All the while, there have also been attempts to record signals as closely as possible from individual neurons. This can be done by exploiting the neurons’ most distinctive characteristic: when they exchange chemical messages with each other, neurons change their electric potential, and these changes can be picked up by tiny needle-like electrodes. In order to do so, the electrodes need to be placed next to the cells, and to get there, one must surgically get under the skull.

Invasive BCIs and Neural Prosthetics

In fact, neuroscientists have been using electrodes to record the activity of single neurons for many decades. Because of this, the very first BCIs were surgically implanted: the field started off hardcore. In 1964, Spanish neuroscientist José Delgado implanted a wireless electrode into a bull’s brain and, in an astonishing demonstration, seemed to remote-control the bull’s mood, apparently calming the animal down when the bull was charging—a feat Delgado performed while locked in an arena with the animal. (Neuroscience used to be metal!) More respectably, researcher Eberhard Fetz demonstrated in 1969 that primates with an implanted cerebral electrode could learn to control their neural activity to regulate the output of the electrode (a mechanical needle, in this case, rather than an on-screen cursor). In doing so, Fetz took an investigative tool of neuroscience (an electrode for recording neuronal activity) and turned it into a neural prosthetic, giving primates the ability to control a needle without using their body.

Since then, invasive BCIs have grown in parallel with the development of better and more sophisticated means to record neural activity. Current commercial and research devices might contain thousands of electrodes arranged into arrays of needle-like wires to reach inside neuron populations. Often, each of these wires contains multiple electrodes along its length.

These technological improvements have led to the development of tangible products. As noted above, over a million people with implanted devices walk among us—effectively, a small country of cyborgs. Most of them have received cochlear implants to restore hearing (despite the term’s association with hearing, these are brain implants). A sizable number of them are older adults who have received a so-called deep brain stimulator (DBS) to restore compromised motor function. A few hundred more have been implanted with a variety of devices that replace sight. And a few dozen have received experimental implants that connect to artificial robotic arms and legs, which they control through their neural activity.

Although some neural prosthetics are remarkably simple (the simplest DBS devices, for example, are akin to brain pacemakers), most of them require complex software that translates between the external world and the brain (e.g., a cochlear implant that detects sounds and translates them into neural code) or that translates neural signals into actions (e.g., a motor neuroprosthetic that detects the intention of moving an arm and controls a mechanical arm).

Virtually all of these interfaces rely on machine-learning algorithms that learn the code used by the specific population of neurons targeted by the device. A machine-learning algorithm would analyze the incoming data and be trained to detect features—reliable characteristics of neural signals that can be measured and associated with meaningful actions or sensations. The parameters of the algorithm need to be carefully fine-tuned; even when they are implanted in the same brain locations, every device ends up listening to different populations of neurons, and their signals vary significantly across individuals.
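
As a rough illustration of what "learning the code" means, the sketch below trains a deliberately simple stand-in, a nearest-centroid classifier, on a handful of made-up calibration trials. This is not the algorithm any particular device uses, and real decoders are far more elaborate; the firing rates, the three-electrode layout, and the function names (`train_centroids`, `decode`) are hypothetical. The point is only that the decoder's parameters come from one person's calibration data, which is why they have to be fitted anew for every implant.

```python
import math

def train_centroids(trials):
    """Fit the decoder: average the feature vectors recorded for each intended action.

    trials is a list of (feature_vector, label) pairs from a calibration session.
    Returns a dict mapping each label to its mean feature vector (its centroid).
    """
    sums, counts = {}, {}
    for features, label in trials:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def decode(centroids, features):
    """Return the action whose centroid is closest to the observed features."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

# Hypothetical calibration data: firing rates (spikes per second) on three
# electrodes while the user imagines moving a cursor left or right.
calibration = [
    ([42.0, 8.0, 11.0], "left"),
    ([39.0, 10.0, 9.0], "left"),
    ([12.0, 37.0, 10.0], "right"),
    ([9.0, 41.0, 13.0], "right"),
]
decoder = train_centroids(calibration)

# A new observation that resembles the "left" trials is decoded as "left".
print(decode(decoder, [40.0, 9.0, 12.0]))
```

Here the "features" are just average firing rates per electrode. A second user, with electrodes sitting next to different neurons, would produce different calibration trials and therefore a different decoder.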

These algorithms can become extremely sophisticated. In some of the most amazing demonstrations, such BCIs have been trained to detect extremely complex signals, such as the intention to move a hand in specific directions and the positions of individual fingers in space. In a 2012 episode of 60 Minutes, a patient with an implant designed by neuroscientist Andrew Schwartz was able to control a large robotic arm with uncanny precision, shaking hands with the TV host. (In a 2015 interview, Schwartz called the project the “150-million-dollar man.”)

But, despite these successes, this is precisely where things get messy: when devices have to speak the neural code.

The Challenge of Speaking the Brain’s Language

Machine learning can go a long way in picking up meaningful signals from neural data. But if you’re going to stimulate the brain, it is essential to have an idea of what you want to say to the neurons. To do this, you need to have a theory of what happens when a set of neurons fire in a particular area, from their cascading effects on other neurons to the end result as perceived by the individual. In the jargon of computational neuroscience, a theory of how a brain circuit works is often called the “forward model.”

In certain cases, the forward model can be extremely straightforward. Delgado’s bull-taming feat succeeded because the brain circuit that controls the bull’s charging behavior lies at the intersection of motor and motivational areas, and regulating it up or down is sufficient to bring about a dramatic change.

A similar case can be made for many deep-brain stimulators. The motor disorders associated with Parkinson’s disease and essential tremor are a side effect of damage to a complex circuit in the middle of the brain known as the basal ganglia. The circuit works through two chains of nuclei that work in opposition; neuroscientists often describe them as a combo of brake and accelerator. Paradoxically, tremors emerge when the brake is too strong; think of a car trying to move when the emergency brake is on, and how it would rock back and forth as the engine tries to push it, and you have an idea of how this could happen. A DBS implant is designed to rev up the engine enough to overcome the brake. While the implant needs to be carefully positioned and its frequency calibrated, it does not require a very detailed model of the circuit. And yet, the improvement such an implant can bring about in a person’s life may be dramatic, as shown in the 2020 documentary I Am Human.

In other domains, however, the lack of a sophisticated model could spell doom for the device.

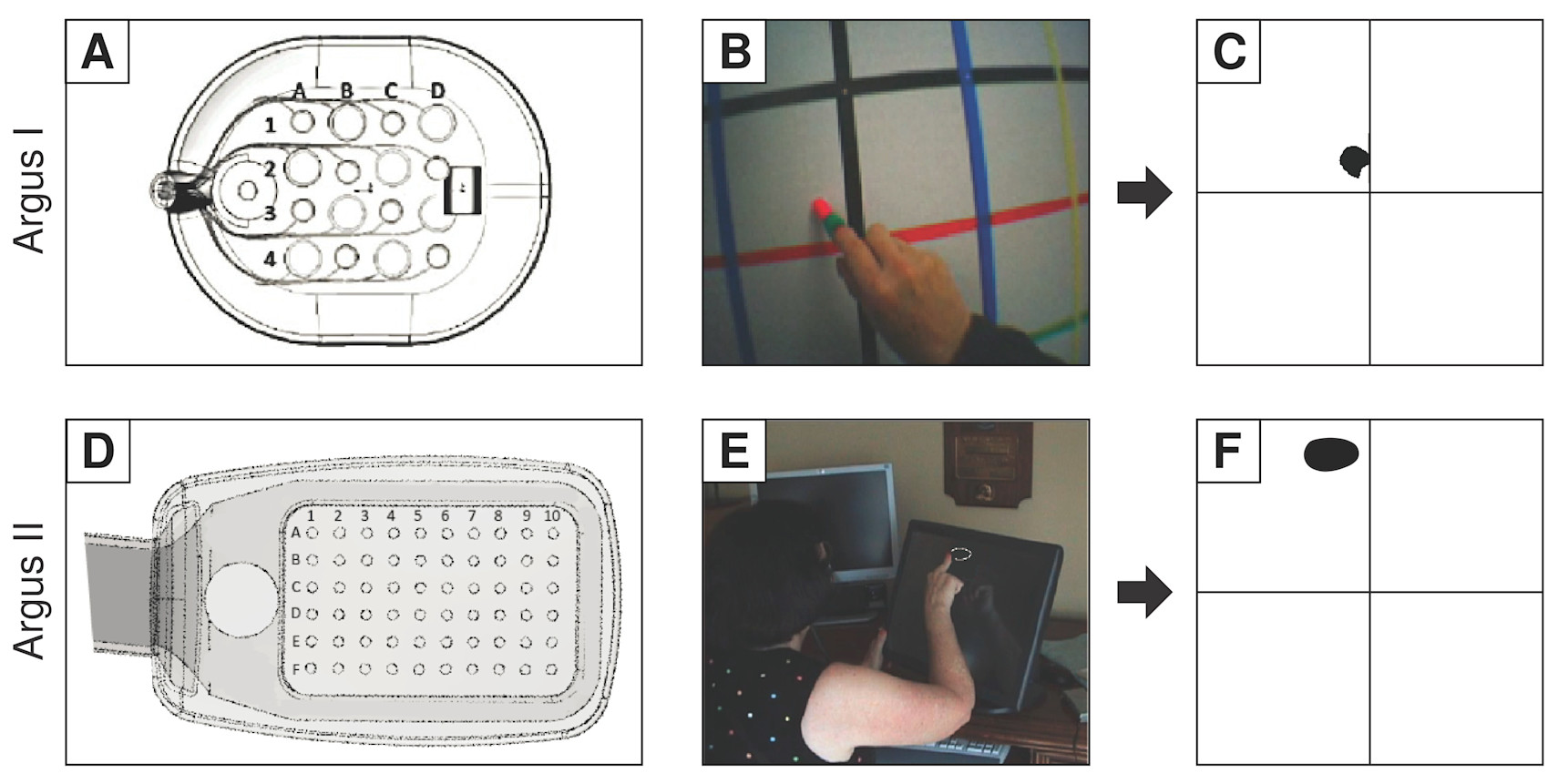

Consider, for example, the case of the retinal prosthetic known as Argus. The device consists of a glasses-mounted camera that feeds low-resolution images to a small grid of electrodes implanted on a person’s retina (and yes, the retina really is part of the brain).1

The idea behind this prosthetic is intuitive. The retina works a lot like the raster of your camera—that is, the matrix of light-sensitive photoreceptors that corresponds to the image’s pixels. So it makes sense to simply reduce an image to the size of the retinal array, convert it into a numerical matrix of light intensities (a black-and-white image), and use these intensities to stimulate the corresponding locations of the retina.
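
The intuitive mapping just described can be sketched in a few lines of Python. This is an illustration of the naive pixel-to-electrode idea only, not the device's actual software; the function name `to_electrode_grid` and the tiny example image are assumptions made for the sake of the example.

```python
def to_electrode_grid(image, grid_rows, grid_cols):
    """Average-pool a grayscale image down to one intensity per electrode.

    image is a list of rows of pixel values in the range 0-255, and must be at
    least as large as the grid in both dimensions. The result is a
    grid_rows x grid_cols matrix of stimulation intensities between 0.0 and 1.0.
    """
    height, width = len(image), len(image[0])
    grid = []
    for r in range(grid_rows):
        top, bottom = r * height // grid_rows, (r + 1) * height // grid_rows
        row = []
        for c in range(grid_cols):
            left, right = c * width // grid_cols, (c + 1) * width // grid_cols
            # Collect every pixel that falls under this electrode and average it.
            block = [image[y][x] for y in range(top, bottom) for x in range(left, right)]
            row.append(sum(block) / len(block) / 255.0)
        grid.append(row)
    return grid

# A 4x4 test image whose left half is bright and whose right half is dark,
# reduced to a hypothetical 2x2 electrode array.
image = [[255, 255, 0, 0] for _ in range(4)]
print(to_electrode_grid(image, 2, 2))  # [[1.0, 0.0], [1.0, 0.0]]
```

The sketch treats every electrode as if it were an independent pixel, with each value in the output matrix lighting up exactly one spot in the visual field.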

Although appealing, this approach runs into two problems. The first is that, although neurons do produce electrical currents, they do not really “talk” in terms of electricity: neurons chat with each other in a chemical language, with small and spatially circumscribed releases of neurotransmitter molecules. It is these molecules that trigger the chain reaction that leads to a neuron generating current. A neuron can also generate current if electrically (or mechanically) stimulated, but the field generated by an electrode expands in the fluid surrounding the neurons much beyond what a neurochemical signal would do.

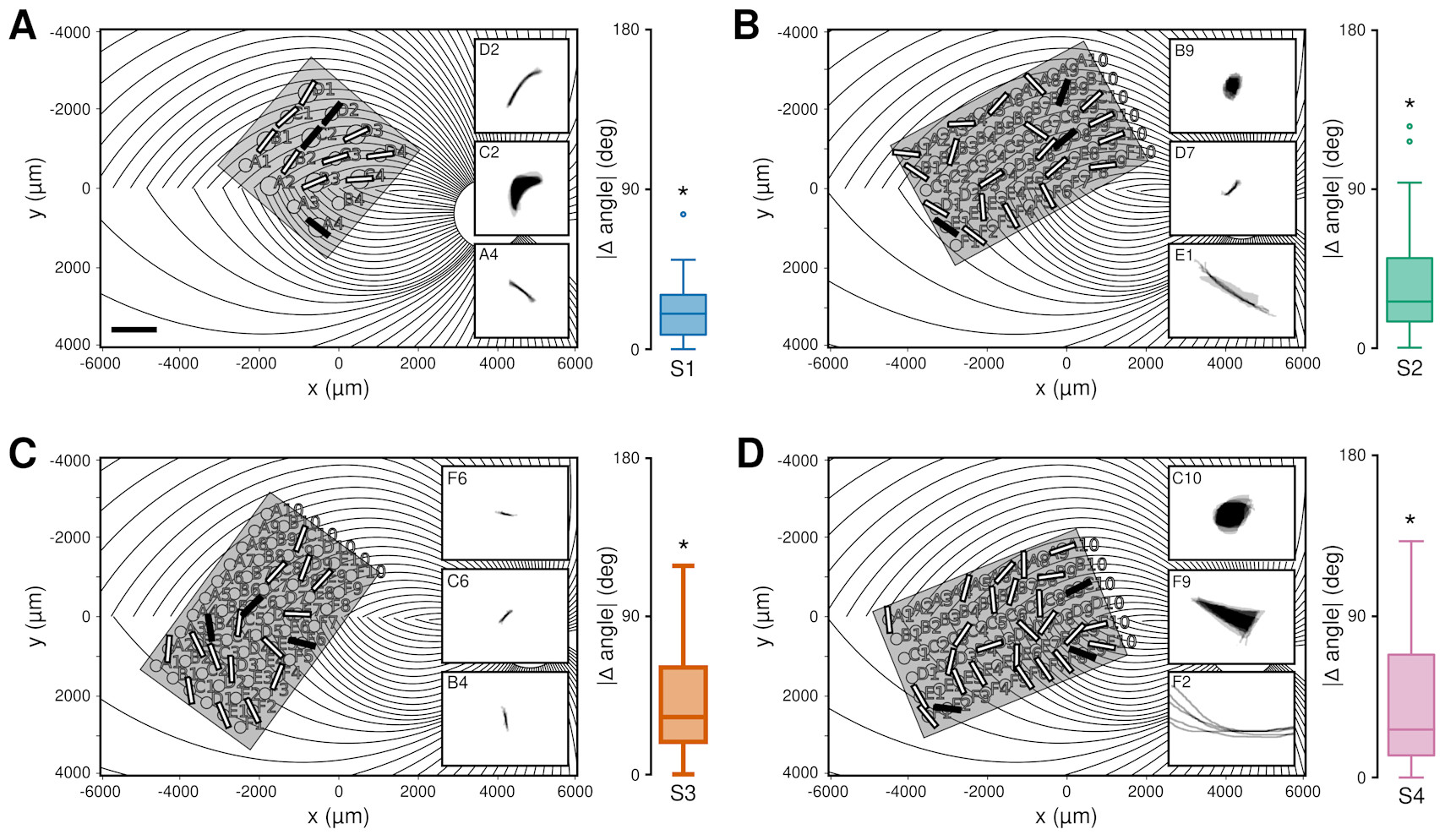

In the case of the retina, the current ends up stimulating the axons—the appendages of neurons that form and carry signals to other neurons and that, in the eye, happen to run in bundles under the retinal cells. Researcher Michael Beyeler of the University of California, Santa Barbara, through careful tests of patients with retinal prosthetics, has simulated the current’s effects on axons, showing that focal stimulation of single arrays would not result in anything similar to the activation of pixels.

On account of this dissimilitude, the current research on vision-restoration prosthetics is now focused on cortical implants—the restoration of vision by transmitting images recorded from a camera directly to the part of the brain that would receive inputs from the retina. This is a surprisingly old technique; neurophysiologist William Dobelle designed one such device in the 1970s, which he finally presented in 2002 as a working prototype: a cortical implant connected to a camera through a carry-on laptop computer. Using this device, one blind individual gave a spectacular demonstration of driving a car—maybe not quite as impressive as controlling a raging bull, but pretty close.

One of the reasons why cortical implants are appealing is that when stimulating the early cortical regions (V1, as it is aptly named), you encounter neither the performance issues nor the potential economic obstacles of retinal implants. In addition, modern digital cameras do a lot of preprocessing (e.g., light and focus adjustment) that is exactly the kind of computation the eye and the retina perform. So, it should work better, right? To a certain extent, it does, but the problems do not disappear. And this is where we reach the second obstacle: not only do the electrical signals merely approximate neuronal communication, but neurons also have their own way of processing information.

Like modern AIs, neurons work well because they work in groups. The visual system, for example, is made of a very large cascade of neurons that are specialized to detect increasingly complex features at increasingly large resolution—a cascade so large that it is estimated to involve one-third of the whole brain. At none of these levels do neurons behave like pixels on a camera; rather, they all monitor a specific portion of the visual field and detect basic visual elements like a bright spot, a dark spot, a border, or a stripe. Because of this, stimulation of any of the neurons in V1 never corresponds to the illumination of a pixel on an image; it is, instead, like forcing a single neuron to scream, “Hey, there is a spot/border/stripe here!” and pass the message up the chain of command. Researchers Ione Fine and Geoff Boynton, who have developed a realistic computer model of V1, recently simulated what would happen when an image is transmitted through an implanted prosthetic: they found that the perceived image would always be dramatically blurred, to a resolution that is much lower than the density of the electrodes.

Sometimes, the loss of quality might be acceptable. Cochlear implants, for example, do not fully restore hearing, nor do they capture the full quality of sound that healthy ears provide to the brain. Yet, with practice, the brains of patients adapt and learn how to make sense of these signals, extracting a surprisingly large amount of information from them. But it is not clear yet whether vision prosthetics could capitalize in the same way on the ability of the patient’s brain to learn.

These problems only become more daunting when you get into the more ambitious forms of BCI. The parts of the brain responsible for visual perception and motor control are perhaps the best-understood circuits, so they are naturally the most common targets for neuroprosthetics. But the number of medical disabilities resulting from damage to less understood regions of the brain may be greater.

Take, for example, the case of memory loss in Alzheimer’s disease. Wouldn’t it be nice if a neural prosthetic could do what no drug has ever been able to do, and restore damaged memory? Researcher Theodore Berger has been working for years to develop a device that could simulate the function of the hippocampus, the part of the brain that creates memories. Early results in rats were encouraging: the animals could learn simple problems much faster when the device was implanted in that region (a sign that they might remember better), and they could still solve them after the hippocampus had been injured. But we are still far from a true memory prosthetic. For one, scientists continue to debate the exact function of the hippocampus. But, most importantly, all scientists agree that memories are not static: over months and years, memories are recorded and then undergo profound transformations, starting in the hippocampus and ultimately being distributed across large portions of the entire brain. Besides the problem of harnessing such a complex transformation, how large and far reaching should such an implant be?

What Comes after a Brain Implant?

So far, we have focused on the principles by which BCIs and neural prosthetics work, and the limitations they face. But in the case of neural prosthetics, making a device work is only the beginning: after implantation, a device needs to continue working. Mechanical and electrical parts do not interact so nicely with organic tissue; and neural prosthetics do tend to lose their efficacy after a few months or years, as scar tissue starts to form around the electrodes. Maintenance also poses a second problem, that of responsibility: What happens if the manufacturer of the device goes out of business? And what if a model is discontinued? Whereas with a wearable EEG headset, one can simply get a new device, replacing an implanted device is not quite so easy.

But even a device that works flawlessly for decades poses problems. Consider, for example, the issue of data ownership. Intuitively, one would think that data belongs to the individuals whose brains generate it. But medical companies could argue that the data is actually theirs: much as social media companies own the data you generate when using their apps, they own the data you generate when interacting with their devices. This data might be used for ethically acceptable goals, such as improving the function of the device. Still, brain data is extremely private information, as it can potentially reveal compromising information about an individual. In a particularly dramatic demonstration, computer scientist Ivan Martinovic and colleagues showed in 2012 that it was possible to decode a person’s credit card PIN from an analysis of their EEG brain waves.

These security and privacy issues are compounded by recent advances in the field. Traditionally, BCIs have been closed systems, interacting with a person’s brain and relying solely on neural signals, but this does not need to be the case. A new generation of DBSs has been proposed, for example, that would calibrate the amount of stimulation based on signals from nearby wireless devices. Such an implant might reduce stimulation until it detects that the patient needs to move, and might rely on signals from a wearable (such as a Fitbit) or from a home security camera to detect an attempt to get up from a chair. This offers the opportunity to make the device much more efficient; at the same time, however, it further erodes the individual’s privacy and increases the number of actors that might have access to the device and its data.

Finally, devices can change an individual’s behavior. The brain is the ultimate site of an individual’s identity, and implanted devices not only have access to it, but the potential to alter it. Consider, again, the case of DBS implants: while they improve motor ability, they also change the way patients process and reach decisions, making them more impulsive.

Neural Prosthetics for Whom?

As of now, BCIs represent a sizable research area: millions of dollars are given out yearly in government grants; a multitude of research trials have been initiated; many companies invest in research and development; and a growing number of startups emerge every year.

Yet, it is troubling that research and development in this field are almost exclusively driven by an impulse toward pathologization and the curing of disabilities. And many have raised rightful concerns, for example, about the implicit assumption that various forms of disabilities should be treated, as opposed to society making efforts to accommodate them. So why has the use of neuroprosthetics, such as cochlear implants, been normalized? Would a paraplegic individual benefit more from having an implant or from the removal of architectural barriers?

When Elon Musk presented the Neuralink chip in the 2021 live demo, he seemed to imply that the company was focusing on engineering because the neuroscientific issues were simple to deal with. I hope to have convinced you that neuroscientific problems are, in fact, still the hard rock where the engineering wave breaks down—and, perhaps, that there are bigger considerations in play than those of engineering and neuroscience.

1. Incidentally, one of these patients is also featured in I Am Human.