AI as we know it is not only a set of algorithmic methods like deep learning, but a layered arrangement of technologies, institutions, and ideologies that claim to offer solutions to many of society’s trickiest problems. Its offer of generalizing abstraction and action at scale appeals directly to the state apparatus, because bureaucracy and statecraft are founded on the same paradigms. From the state’s point of view, the arguments for adopting AI’s alleged efficiencies at scale become particularly compelling under conditions of austerity where, in the years following the 2008 financial crash, public administrations have been required to deal with increased demand while having their resources cut to the bone. There are more working poor, more children living below the poverty line, more mental health problems, and more deprivation, but social services and civic authorities have had their budgets slashed as politicians choose public service cuts over holding financial institutions to account.

The hope of those in charge is that algorithmic governance will help square the circle between rising demand and diminished resourcing, and in turn distract attention from the fact that austerity means the diversion of wealth from the poorest to the elites. Under austerity, AI’s capacities to rank and classify help to differentiate between “deserving” and “undeserving” welfare recipients and enable a data-driven triage of public services. The shift to algorithmic ordering doesn’t simply automate the system but alters it, without any democratic debate. As the UN’s special rapporteur on extreme poverty and human rights has reported, so-called digital transformation and the shift to algorithmic governance conceal myriad structural changes to the social contract. The digital upgrade of the state means a downgraded safety net for the rest of us.

A case in point is the UK government’s “Transformation Strategy,” which was introduced under the cover of the Brexit turmoil in 2017 and set out that “the inner workings of government itself will be transformed in a push for automation aided by data science and artificial intelligence.” The technical and administrative framing allows even token forms of democratic debate to be bypassed. To narrow down the pool of social benefits claimants, new and intrusive forms of conditionality are introduced that are mediated by digital infrastructures and data analytics. Austerity has already been used as a rationale for ratcheting down social benefits and amplifying the general conditions of precariousness. Automated decision-making adds an algorithmic shock doctrine, where the crisis becomes cover for controversial political shifts that are further obscured by being implemented through code.

These changes are forms of social engineering, with serious consequences. The restructuring of welfare services in recent years, under a financial imperative of reducing public expenditure, not only generated poverty and precarity but prepared the ground for the devastation of the Covid-19 pandemic, in the same way that years of drought precede the ravages of a forest fire. According to a review by the Institute of Health Equity in the UK, a combination of cutbacks to social and health services, privatization, and the poverty-related ill-health of a growing proportion of the population over the decade following the financial crash led directly to the UK having a record level of excess mortality when the pandemic hit.

While the sharp end of welfare sanctions is initially applied to those who are seen as living outside the circuits of inclusion, algorithmically powered changes to the social environment will affect everyone in the long run. The resulting social re-engineering will be marked by AI’s signature of abstraction, distancing, and optimization, and will increasingly determine how we are able to live, or whether we are able to live at all.

AI will be critical to this restructuring because its operations can scale the necessary divisions and differentiations. AI’s core operation of transforming messy complexity into decision boundaries is directly applicable to the inequalities that underpin the capitalist system in general, and austerity in particular. By ignoring our interdependencies and sharpening our differences, AI becomes the automation of former UK prime minister Margaret Thatcher’s mantra that “there is no such thing as society.” While AI is heralded as a futuristic form of productive technology that will bring abundance for all, its methods of helping to decide who gets what, when, and how are actually forms of rationing. Under austerity, AI becomes machinery for the reproduction of scarcity.

Machine Learned Cruelty

AI’s facility for exclusion doesn’t only extend scarcity but, in doing so, triggers a shift towards states of exception. The general idea of a state of exception has been a part of legal thinking since the Roman empire, which allowed the suspension of the law in times of crisis (necessitas legem non habet—“necessity has no law”). It is classically invoked via the declaration of martial law or, in our times, through the creation of legal black holes like that of the Guantanamo Bay detention camp.

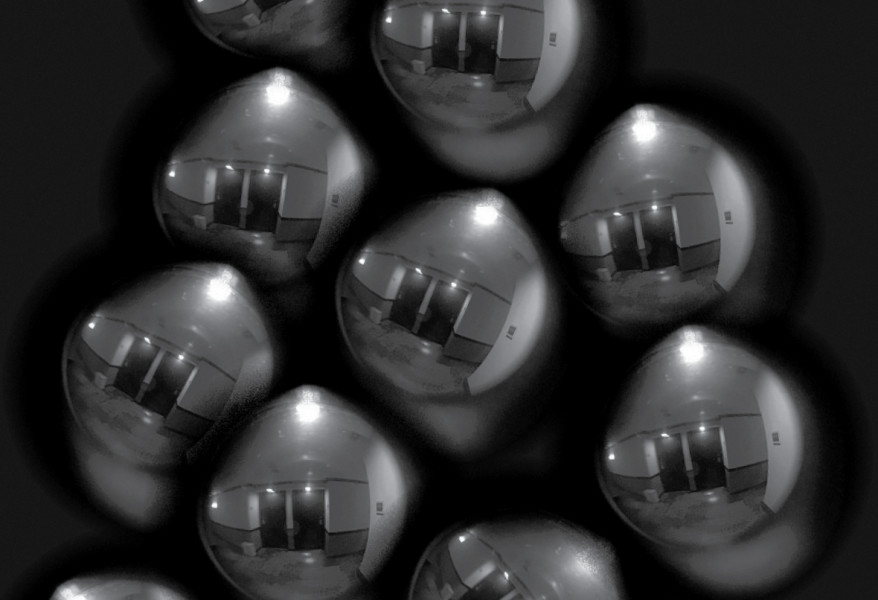

The modern conception of the state of exception was introduced by German philosopher and Nazi Party member Carl Schmitt in the 1920s, who assigned to the sovereign the role of suspending the law in the name of the public good. AI has an inbuilt tendency towards creating partial states of exception through its ability to enforce exclusion while remaining opaque. People’s lives can be impacted simply by crossing some statistical confidence limit, and they may not even know it. AI’s actions of segregating and scarcifying can have the force of the law without being of the law, and will create what we might call “algorithmic states of exception.”

A prototypical example would be a no-fly list, where people are prevented from boarding planes due to unexplained and unchallengeable security criteria. A leaked US government guide to who should be put on a no-fly list says “irrefutable evidence or concrete facts are not necessary,” but “suspicion should be as clear and as fully developed as circumstances permit.” For algorithms, of course, suspicion means correlation. International systems of securitization, such as those implemented by the EU, are increasingly adopting machine learning as part of their mechanics. What AI systems will add to the logic of the no-fly list are computer-aided suspicions based on statistical correlation.

Governments are already implementing fully fledged states of exception for refugees and asylum seekers. Giorgio Agamben uses the term “bare life” to describe the body under the state of exception, stripped of political or civil existence. This is the life of those condemned to spend time in places like the Moria refugee camp in Greece or the Calais Jungle informal settlement in northern France. Meanwhile, asylum seekers in Italy are coerced into “hyper-precarious” situations of legalistic non-existence, ineligible for state subsistence. Under the UK’s “Hostile Environment” regime, people with no recourse to public funds due to their immigration status are charged 150 percent of the actual cost of treatment by the National Health Service, and threatened with deportation if they don’t pay. Incubated under these conditions, AI states of exception will disseminate what the writer Flavia Dzodan calls “machine learned cruelty,” not only at national borders but across the fluctuating boundaries of everyday life.

This diffusion of AI states of exception will come through operations of recursive redlining. Predictive algorithms will produce new and agile forms of personal redlining that are dynamic and updated in real-time. The emergence of AI redlining can be seen in examples like Airbnb’s AI-powered “trait analyzer” software, which risk-scores each reservation before it is confirmed. The algorithms scrape and crawl publicly available information such as social media for anti-social and pro-social behaviors, and return a rating based on a series of predictive models. Users with excellent Airbnb reviews have been banned for “security reasons,” which seem to be triggered by their patterns of friendship and association, although Airbnb refuses to confirm this.

Airbnb’s patent makes it clear that the score produced by the trait analyzer software is not just based on the individual but on their associations, which are combined into a “person graph database.” Machine learning combines different weighted factors to achieve the final score, where the personality traits being assessed include “badness, anti-social tendencies, goodness, conscientiousness, openness, extraversion, agreeableness, neuroticism, narcissism, Machiavellianism, or psychopathy,” and the behavior traits include “creating a false or misleading online profile, providing false or misleading information to the service provider, involvement with drugs or alcohol, involvement with hate websites or organizations, involvement in sex work, involvement in a crime, involvement in civil litigation, being a known fraudster or scammer, involvement in pornography, or authoring an online content with negative language.”

The enrollment of machine learning in redlining is also visible in the case of the NarxCare database. NarxCare is an analytics platform for doctors and pharmacies in the US to “instantly and automatically identify a patient’s risk of misusing opioids.” It’s an opaque and unaccountable machine learning system that trawls medical and other records to assign patients an Overdose Risk Score. One classic failing of the system has been misinterpreting medication that people had obtained for sick pets; dogs with medical problems are often prescribed opioids and benzodiazepines, and these veterinary prescriptions are made out in the owner’s name. As a result, people with a well-founded need for opioid painkillers for serious conditions like endometriosis have been denied medication by hospitals and by their own doctors.

The problems with these systems go even deeper; past experience of sexual abuse has been used as a predictor of likelihood to become addicted to medication, meaning that subsequent denial of medicines becomes a kind of victim blaming. As with so much of socially applied machine learning, the algorithms simply end up identifying people with complex needs, but in a way that amplifies their abandonment. Many states in the US force doctors and pharmacists to use databases like NarxCare under threat of professional sanction, and data about their prescribing patterns is also analyzed by the system. A supposed harm reduction system based on algorithmic correlations ultimately produces harmful exclusions.

These kinds of systems are just the start. The impact of algorithmic states of exception will be the mobilization of punitive exclusions based on applying arbitrary social and moral determinations at scale. As AI’s partial states of exception become more severe, they will derive their social justification from increased levels of securitization. Securitization is a term used in the field of international relations to label the process by which politicians construct an external threat, allowing the enactment of special measures to deal with the threat. The successful passing of measures that would not normally be socially acceptable comes from the construction of the threat as existential—a threat to the very existence of the society means more or less any response is legitimized.

Securitization “removes the focus on social causation” and “obscures structural factors,” write the scholars David McKendrick and Jo Finch; in other words, it operates with the same disdain for real social dynamics as AI itself. The justifications for AI-powered exceptions amount to securitization because, instead of dealing with the structural causes of social crisis, they will present those who fall on the wrong side of their statistical calculations as some kind of existential threat, whether it’s to the integrity of the platform or to society as a whole.

The Tech to Prison Pipeline

One immediate generator of algorithmic states of exception will be predictive policing. Predictive policing exemplifies many of the aspects of unjust AI. The perils of deploying algorithms to produce the subjects you expect to see, for example, are very clear in a system like ShotSpotter. ShotSpotter consists of microphones fixed to structures every few blocks in areas of cities like Chicago, along with algorithms, including AI, that analyze any sounds like loud bangs to determine if they were a gunshot. A human analyst in a central control room makes the final call as to whether to dispatch police to the scene. Of course, the officers in attendance are primed to expect a person who is armed and has just fired a weapon, and the resulting high-tension encounters have been implicated in incidents such as the police killing of thirteen-year-old Adam Toledo in Chicago’s West Side, where body-cam footage showed him complying with police instructions just before he was shot dead.

ShotSpotter is a vivid example of the sedimentation of inequalities through algorithmic systems, overlaying predictive suspicion onto its deployment in communities of color and resulting, inevitably, in cases of unjust imprisonment. Other predictive policing systems are more in the classic sci-fi mold of films like Minority Report. For example, the widely adopted PredPol system (recently rebranded as Geolitica) came out of models of human foraging developed by anthropologists, and was turned into a predictive system as part of counterinsurgency efforts in Iraq. It was only later that it was used to predict crime in urban areas like Los Angeles.

The cascading effect of securitization and algorithmic states of exception is to expand carcerality—that is, aspects of governance that are prison-like. Carcerality is expanded by AI in both scope and form: its pervasiveness and the vast seas of data on which it feeds extend the reach of carceral effects, while the virtual redlining that occurs inside the algorithms reiterates the historical form by fencing people off from services and opportunities. At the same time, AI contributes to physical carcerality through the algorithmic shackling of bodies in workplaces like Amazon warehouses and through the direct enrollment of predictive policing and other technologies of social control in what the Coalition for Critical Technology calls a “tech to prison pipeline.” The logic of predictive and pre-emptive methods fuses with the existing focus on individualized notions of crime to extend the attribution of criminality to innate attributes of the criminalized population. These combinations of prediction and essentialism not only provide a legitimation for carceral intervention but also constitute the process of producing deviant subjectivities in the first place. AI is carceral not only through its assimilation by the incarcerating agencies of the state but through its operational characteristics.

Culling the Herd

The kind of social divisions that are amplified by AI have been put under the spotlight by Covid-19: the pandemic is a stress test for underlying social unfairness. Scarcification, securitization, states of exception, and increased carcerality accentuate the structures that already make society brittle, and the increasing polarization of both wealth and mortality under the pandemic became a predictor of post-algorithmic society. It’s commonly said that what comes after Covid-19 won’t be the same as what came before, that we have to adapt to a new normal; it’s perhaps less understood how much the new normal will be shaped by the normalizations of neural networks, how much the clinical triage triggered by the virus is figurative of the long-term algorithmic distribution of life chances.

One early warning sign was the way that AI completely failed to live up to its supposed potential as a predictive tool when it came to Covid-19 itself. The early days of the pandemic were a heady time for AI practitioners, as it seemed like a moment where new mechanisms of data-driven insight would show their true mettle. In particular, they hoped to be able to predict who had caught the virus and who, having caught it, would become seriously ill. “I thought, ‘If there’s any time that AI could prove its usefulness, it’s now.’ I had my hopes up,” said one epidemiologist.

Overall, the studies showed that none of the many hundreds of tools that had been developed made any real difference, and that some were in fact potentially harmful. While the authors of the studies attributed the problem to poor datasets and to clashes between the different research standards in the fields of medicine and machine learning, this explanation fails to account for the deeper social dynamics that were made starkly visible by the pandemic response, or the potential for AI to drive and amplify those dynamics.

In the UK, guidelines applied during the first wave of Covid-19 said that patients with autism, mental disorders, or learning disabilities should be considered “frail,” meaning that they would not be given priority for treatment such as ventilators. Some local doctors sent out blanket do-not-resuscitate notices to disabled people. The social shock of the pandemic resurfaced visceral social assumptions about “fitness,” which shaped both policy and individual medical decision-making, and were reflected in the statistics for deaths of disabled people. The UK government’s policy-making breached its duties to disabled people under both its own Equality Act and under the UN Convention on the Rights of Persons with Disabilities. “It’s been extraordinary to see the speed and spread of soft eugenic practices,” said Sara Ryan, an academic from Oxford University. “There are clearly systems being put in place to judge who is and isn’t worthy of treatment.”

At the same time, it became starkly obvious that Black and ethnic minority communities in the UK were hit by a disproportionate number of deaths due to Covid. While initial attempts to explain this reached for genetic determinism and race science tropes, these kinds of health inequities occur primarily because of underlying histories of structural injustice. Social determinants of health, such as race, poverty, and disability, increase the likelihood of pre-existing health conditions, such as chronic lung disease or cardiac issues, which are risk factors for Covid-19; poor housing conditions, such as mold, increase other comorbidities, such as asthma; and people in precarious work may simply be unable to work from home or even afford to self-isolate. So much sickness is itself a form of structural violence, and these social determinants of health are precisely the pressure points that will be further squeezed by AI’s automated extractivism.

By compressing the time axis of mortality and spreading the immediate threat across all social classes, the pandemic made visible the scope of unnecessary deaths deemed acceptable by the state. In terms of deaths that can be directly traced to UK government policies, for example, the casualties of the pandemic can be added to the estimated 120,000 excess deaths linked to the first few years of austerity. The pandemic has cast a coldly revealing light not only on the tattered state of social provision but also on a state strategy that considers certain demographics to be disposable. The public narrative about the pandemic became underpinned by an unspoken commitment to the survival of the fittest, as the deaths of those with “underlying health conditions” were portrayed as regrettable but somehow unavoidable. Given the UK government’s callous blustering about so-called “herd immunity,” it’s unsurprising to read right-wing newspaper commentary claiming that, “from an entirely disinterested economic perspective, the Covid-19 might even prove mildly beneficial in the long term by disproportionately culling elderly dependents.”

Appalling as this may be in itself, it’s important to probe more deeply into the underlying perspective that it draws from. What’s at stake is not simply economic optimization but a deeper social calculus. A deep-seated fear that underlies the acceptability of “culling” your own population is that a frail white population is a drain, one that makes the nation vulnerable to decline and replacement by immigrants from its former colonies. AI is a fellow traveler in this journey of ultranationalist population optimization because of its usefulness as a mechanism of segregation, racialization, and exclusion. After all, the most fundamental decision boundary is between those who can live and those who must be allowed to die.

Predictive algorithms subdivide resources down to the level of the body, identifying some as worthy and others as threats or drains. AI will thus become the form of governance that postcolonial philosopher Achille Mbembe calls necropolitics: the operation of “making live/letting die.” Necropolitics is state power that not only discriminates in allocating support for life but sanctions the operations that allow death. It is the dynamic of organized neglect, where resources such as housing or healthcare are subject to deliberate scarcification and people are made vulnerable to harms that would otherwise be preventable.

The designation of disposability can be applied not only to race but along any decision boundary. Socially applied AI acts necropolitically by accepting structural conditions as a given and projecting the attribute of being suboptimal onto its subjects. Expendability becomes something innate to the individual. The mechanism for enacting this expendability is rooted in the state of exception: AI becomes the connection between mathematical correlation and the idea of the camp as the zone of bare life. In Agamben’s philosophy, the camp is pivotal because it makes the state of exception a permanent territorial feature. The threat of AI states of exception is the computational production of the virtual camp as an ever-present feature in the flow of algorithmic decision-making. As Mbembe says, the camp’s origin is to be found in the project to divide humans: the camp form appears in colonial wars of conquest, in civil wars, under fascist regimes, and now as a sink point for the large-scale movements of refugees and internally displaced people.

The historical logic of the camp is exclusion, expulsion and, in one way or another, a program of elimination. There is a long entanglement here with the mathematics that powers AI, given the roots of regression and correlation in the eugenics of Victorian scientists Francis Galton and Karl Pearson. The very concept of “artificial general intelligence” (the ability for a computer to understand anything a human can) is inseparable from historical efforts to rationalize racial superiority in an era when having the machinery to enforce colonial domination was itself proof of the superiority of those deploying it. What lies in wait for AI is the reuniting of racial superiority and machine learning in a version of machinic eugenics. All it will take is a sufficiently severe social crisis. The pandemic foreshadows the scaling up of a similar state response to climate change, where data-driven decision boundaries will be operationalized as mechanisms of global apartheid.

Occupying AI

The question, then, becomes how to interrupt the sedimentation of fascistic social relations in the operation of our most advanced technologies. The deepest opacity of deep learning doesn’t lie in the billion parameters of the latest models but in the way it obfuscates the inseparability of the observer and the observed, and the fact that we all co-constitute each other in some important sense. The only coherent response to social crisis is, and always has been, mutual aid. If the toxic payload of unconstrained machine learning is the state of exception, its inversion is an apparatus that enacts solidarity.

This isn’t a simple choice but a path of struggle, especially as none of the liberal mechanisms of regulation and reform will support it. Nevertheless, our ambition must stretch beyond the timid idea of AI governance, which accepts a priori what we’re already being subjected to, and instead look to create a transformative technical practice that supports the common good.

We have our own histories to draw on, after all. While neural networks claim a generalizability across problem domains, we inherit a generalized refusal of domination across lines of class, race, and gender. The question is how to assemble this in relation to AI, how to self-constitute in workers’ councils and people’s assemblies in ways that interrupt the iteration of oppression with the recomposition of collective subjects. Our very survival depends on our ability to reconfigure tech as something that adapts to crisis by devolving power to those on the ground closest to the problem. We already have all the computing we need. What remains is how to transform it into a machinery of the commons.