When I was giving birth to my oldest son, the attending told me it would feel like an elephant was stepping on my chest. They made the C-section incision right below my belly. With the high dose of epidural, my whole body shook like I was having a seizure. Then, a few minutes later, it was over, and I was relieved of ten months of pregnancy and the clinical intrusions that left my body feeling like it no longer belonged to me.

I felt a similar sense of relief nine years later, leaving the building located at 6301 12th Avenue in Bay Ridge with my three-year-old Jasyn. Jasyn was originally my nephew, but the Administration for Children’s Services (ACS), New York City’s child welfare agency, removed him from his biological mother, my sister-in-law, because she was unable to care for him independently and was offered no meaningful support. Jasyn’s three siblings were also snatched up by ACS and placed with me through a “kinship foster care” arrangement, which means a relative agrees to raise the kids. My sister-in-law used to joke that I had one baby by her brother (my biological son) and four by his sister (her).

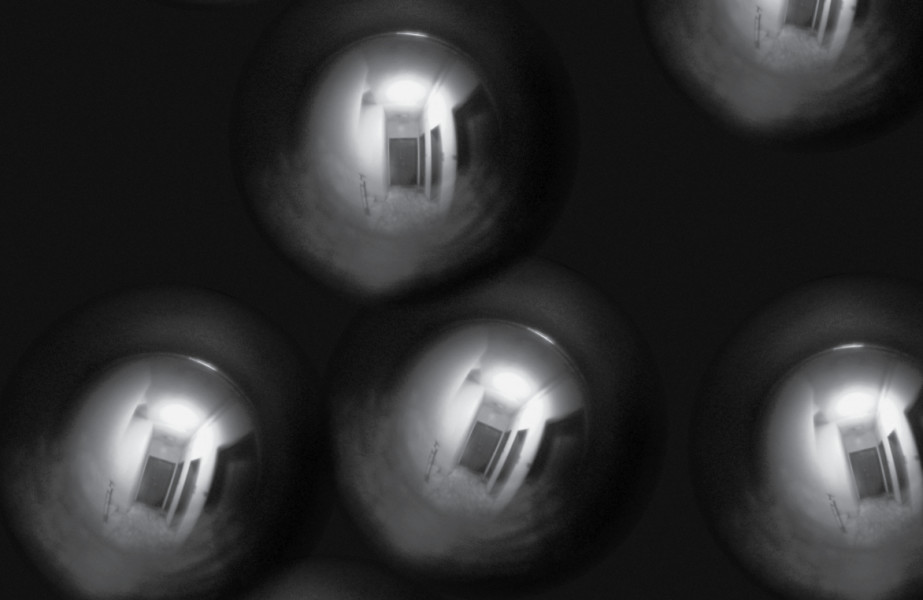

At the time, the Bay Ridge building housed the administration of MercyFirst, a nonprofit foster care agency. It was also where parents separated from their children had supervised visits. The building used to be Angel Guardian Orphanage and retained its foreboding presence, surrounded by high metal fences topped with barbed wire. The otherwise derelict ambience threatened to swallow us whole, but now we were finally outside, birthed through the front gate and onto the empty Bay Ridge sidewalk.

The city government does not directly manage the custody of children removed from their families into foster homes. Rather, it contracts out to nonprofits like MercyFirst for the management, care, and placement of children, under the supervision of ACS. MercyFirst was the agency that oversaw my kinship foster care arrangement with Jasyn. The agency required me to bring Jasyn in for a psychological evaluation. Even though I was annoyed they didn’t allow me to take him to a community clinic, I complied, wanting to just get it over with.

The testing was relatively uneventful and, after an hour, we were finally released back into the world to go home. As I waited for the Uber driver to arrive, I thought about why Jasyn had bitten his tongue until he bled during the test. That was unusual for him, but I guessed he just felt the weight of the building pressing on his chest as much as I did, and wanted out. The cab pulled up and we walked around the corner to the far side of the building to get in. Jasyn grumbled slightly as I buckled him in. Transitions were always challenging, but we had gotten into a rhythm through verbal affirmations and grounding exercises I had learned from his preschool. About five minutes into the cab ride, I got a call from the foster care agency caseworker’s supervisor, Tatiana Nedzelskaya.

First, she said, three caseworkers were standing outside when they saw me viciously beating him into the cab. Then she said, no, they saw me from the window punching him brutally in the face and slamming him into the cab. As she demanded I turn around to bring him back to MercyFirst in order to receive a “body check”—where a caseworker or agency medical staff strip-searches the child down to their underwear to check for marks and bruises—I felt the pressure in my chest making it difficult to breathe. At this point, I was sobbing hysterically trying to process the accusations and Jasyn was screaming, terrified by my display of emotions as I’m typically a rather stoic person. I got it together enough to put the Uber driver on speaker, and he affirmed that we had uneventfully gotten in the cab without any beatings. Tatiana was unmoved, demanding I bring him back immediately.

I hung up on her as I realized this was a setup. A couple of weeks earlier, I had filed a formal complaint to ACS, reporting the agency for refusing to finalize the adoption of Jasyn and my other three nieces and nephew without any stated reason. They also refused to allow me to seek private evaluations and services to support their disabilities. For me, adoption meant legally severing our family’s relationship with a family policing system that terrorized us. To be clear, adoption still erroneously conjures the image of do-gooders saving orphaned children in the public imagination, yet it’s made possible through legally severing the ties between a child and their biological parents. We would have pursued guardianship instead if that had been an option at the time. But I couldn’t see any other way for us to get out of the system where we were constantly under surveillance.

If I brought Jasyn back to the building, I knew that would be the last time I’d ever see him. They’d ask to speak to me privately while he was in the exam room and escort him out through another door. It didn’t matter that I didn’t beat him or that the entire story was concocted. While I’d be arguing with them, a call would be made for my other kids to be removed from school on an emergency warrant. I could lose all of them. I couldn’t go back to the agency.

On the Offensive

I began to formulate a plan. I called my squad of babysitters to bring my kids back home right away. From there, we all went to the Weill Cornell Pediatric Emergency Room. I explained the accusations and asked for the kids to receive a full medical exam to confirm that none had marks or bruises on their bodies. The attending physician managing the ER during this shift requested to speak to each kid privately in order to ask them about their home life and whether they had experienced any abuse. I enthusiastically consented. God bless this woman who, in the middle of rush hour, examined five children with ostensibly no medical emergencies, typed and hand-signed individual letters for each, stating they were in good health, all on the official Weill Cornell letterhead that I knew would legitimize my story in court.

When we got home, the kids collapsed into their beds, exhausted from the emotional stress, after a full day of school no less. I wanted nothing more than to rest but I knew the day was not over. Once a call is made to the New York Statewide Central Register of Child Abuse and Maltreatment—which I assumed Tatiana had done—child protective service agents must respond within twenty-four hours. The knock at the door came at 2:00 AM. If the kids were not in foster care, I would never have opened without a warrant. But I had no legal standing to refuse entry as a foster parent. The kids were contractually the property of New York State and I was just an instrument through which they could supervise their property. In fact, foster parents are the only category of parents legally obligated to open the door to a police officer or a child protective services agent without a warrant. When a foster parent “opens their home” to go through the set of legal processes to become certified to take a foster child, their entire household is subject to policing and surveillance.

So I already knew what time it was as the woman sat at my dining room table asking me a thousand questions and the man wandered around, wordlessly inspecting my house. They both paused to look at me when they noticed I was sitting with a pen, annotating their visit. I made a big show of writing the date and time on the fresh notebook page while I asked them for their full names and why they were here. I knew they wouldn’t give their names or share the details of the accusation. But the performance was critical in the context of family policing, where documentation is a life-or-death matter. It never matters whether you are a good parent or a bad one. The family police look for whether you will roll over and die, or whether you have the skills to catch them slipping.

The agent who remained silent paid close attention as I recounted what happened and how it had been a setup in retaliation for me reporting the foster care agency. The side of the MercyFirst building where Tatiana claimed three caseworkers witnessed me beating Jasyn happened to be completely boarded up, which I proved by opening Google Street View on my laptop and cross-matching it to the Uber receipt showing the exact location of where the cab picked us up. There was no way the caseworkers could’ve seen us on the sidewalk.

The agents’ eyes glazed over. They told me to put the laptop away. For the next forty-five to sixty days, the agency would investigate whether there was credible evidence that I was a neglectful or abusive parent. Still, the man said, “We don’t take kids from apartments that look nice like yours… This whole thing sounds suspect.” Regardless, they insisted on completing the most dreaded aspect of an investigation: waking up the kids for strip searches to check them for bruises. I marched each of them out one at a time into the bathroom, where they had to remove all of their clothes down to their underwear, including the baby. Caseworkers are not physicians and do not have any training to distinguish a bad patch of eczema from an old bruise, so the inspection of a child’s naked body can always result in removal. Fortunately, they went through the motions with minimal commentary and left. I could breathe again, at least for now.

The next day, I wrote a letter outlining how the foster care agency had directly retaliated against me for making a report against them. I attached the Weill Cornell documents and outlined the areas where I believed the agency was committing fraud or abusing kids in care. As the years had rolled on and the agency had fought me on everything from adoption to therapy for the kids, I had begun to dig into their tax documents, research their board members and funders, and dig up any financial relationships I could get my hands on. I made twenty copies of everything, and then had them notarized and sent via certified mail to all my local and state representatives. I had to be on the offensive now or risk losing my kids.

My assemblywoman responded to my letter, and shared it with the commissioner of the city’s Department of Investigation. An inquiry was initiated; I heard through the grapevine that the agency had to go through a full audit. As for the allegation of child abuse, I eventually beat the case and received a letter from the New York Statewide Central Register of Child Abuse and Maltreatment saying that the allegation was unsubstantiated. There’s no innocence in family policing—only “We could prove you’re abusive,” or “We couldn’t prove you’re abusive.” Meanwhile, even sealed unsubstantiated allegations remain on file, to be re-opened whenever there’s a new investigation.

I was financially poor but professionally fortunate to have connections who put me in touch with various family defense clinics in the city to ask for advice about my case. Not a single one was surprised about the false allegations. What they were uniformly shocked about was that the kids hadn’t been snatched up. While what happened to us might seem shocking to middle-class readers, for family policing it is the weather. (Black theorist Christina Sharpe describes antiblackness as climate.) The only aberration of my particular circumstances relative to the everyday operations of the family policing apparatus was that we seemed to elude destruction. Over the next two years, I was able to finalize the adoptions for all of the kids. But I never forgot that the only reason I didn’t lose my family was that I had the resources to make the lives of the people working at the foster care agency a living hell. How many teenage mothers, every bit as innocent as me, had they successfully deployed this tactic against?

The Digital Poorhouse

Every aspect of interacting with the various institutions that monitored and managed my kids (ACS, the foster care agency, Medicaid clinics) produced new data streams. Diagnoses, whether an appointment was rescheduled, notes on the kids’ appearance and behavior, and my perceived compliance with the clinician’s directives were gathered and circulated through a series of state and municipal data warehouses. And this data was being used as input by machine learning models automating service allocation or claiming to predict the likelihood of child abuse. But how interactions with government services are narrated into data categories is inherently subjective, as well as contingent on which groups of people are driven to access social services through government networks of bureaucratic control and surveillance. Documentation and data collection were not something that existed outside of analog, obscene forms of violence, like having your kids torn away. Rather, they were deeply tied to real-life harm.

I had no pre-existing interest in the banal details of data collection, but when I read the political scientist Virginia Eubanks’ Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor in 2018, I finally had a framework to understand how our experience was so deeply driven by, and entangled with, the production of data. Eubanks’ notion of the “digital poorhouse”—a complex set of computational geographies for disciplining poor people—was viscerally real for me.

One of the case studies in Eubanks’ book examining public sector adoption of automated decision making is the Allegheny Family Screening Tool (AFST), a predictive risk model used by the Allegheny County Department of Human Services in Pennsylvania to screen calls accusing parents of child abuse. The software attempts to predict the risk of child abuse in order to target high-risk households with preventive interventions. So, how is that risk calculated? Utilization of social services is one factor. For example, if a mother has accessed mental health resources through a Medicaid-funded clinic, she would be perceived by the algorithm to be a mother with a history of mental illness. Yet if the same mother accessed (more expensive) private mental health services, which are not contractually mandated to report medical records to the state, she would be labeled by the algorithm as a mother without mental illness. Disability doesn’t inherently make someone unable to parent, but being marked as a disabled parent activates a suspicion about parental competence.

On the surface, an examination of the datasets produced through interactions with social services might appear to tell you something about the people who rely on them. In fact, that is a core assumption of predictive risk modeling tools like AFST, which don’t, for example, see data about the frequency with which child welfare agencies beat, abuse, and kill children. The dominant narrative about child welfare is that it is a benevolent system that cares for the most vulnerable. The way data is correlated and named reflects this assumption. But this process of meaning making is highly subjective and contingent. Similar to the term “artificial intelligence,” the altruistic veneer of “child welfare system” is highly effective marketing rather than a description of a concrete set of functions with a mission gone awry.

Child welfare is actually family policing. What AFST presents as the objective determinations of a de-biased system operating above the lowly prejudices of human caseworkers are just technical translations of long-standing convictions about Black pathology. Further, the process of data extraction and analysis produces truths that justify the broader child welfare apparatus of which it is a part. In her article “The Steep Cost of Capture,” Meredith Whittaker explains how “Tech firms are startlingly well positioned to shape what we do—and do not—know about AI and the business behind it, at the same time that their AI products are working to shape our lives and institutions.” Likewise, family policing agencies are startlingly well positioned to shape (and conceal) what we know about how they operate and the technical infrastructures they produce.

The carceral nature of family policing becomes clearer when one considers which families it targets. As the scholar Dorothy Roberts explains in her 2022 book Torn Apart, an astonishing 53 percent of all Black families in the United States have been investigated by family policing agencies. Roberts further highlights how the majority of children separated from their families are removed for reasons of poverty, which the government criminalizes through the category of neglect. An even closer examination of these facts reveals that impoverished African American families have almost all experienced family policing and have some of the highest rates of removal, comparable only to those of Indigenous children in certain states.

The production of administrative data is a mechanism through which family policing agencies regulate and manage where poor people are allowed to go and with whom. If you have an “indicated” case (the equivalent of “guilty”) in any of the statewide central registries, you can’t work in a daycare or a school. If you can’t find work in the formal economy because you’re criminalized through the registry, you’re going to have a hard time gaining eligibility for government vouchers subsidizing child care. If you catch a felony due to an allegation of abuse or neglect, you can’t live in public housing. Even if someone else in your household has a felony and you live in public housing, you have to cast them out. If you want to leave an abusive relationship but have an indicated case, your options to leave are often limited to the domestic violence shelter (which is run by the family police) where you have a high risk of losing your child. If your daughter asks you to watch your grandchild while she goes to work and her partner makes a vengeful allegation against her, she can now lose custody of her child for leaving them with you.

Despite social services’ parallels to and partnerships with traditional policing, family courts treat them as exempt from constitutional guardrails. In practice, this means that people on the receiving end have no Fourth Amendment protections from unreasonable search and seizure, are not read their Miranda rights, and have no guarantee of an attorney being appointed to them at the onset of an investigation. Meanwhile, caseworkers and those who make allegations of child abuse to the statewide central registries receive qualified immunity. The volume of administrative data produced by family policing reflects this depth of intergenerational intrusion within the domestic lives of Black families. Even unfounded cases are reopened at the onset of new investigations, so for many families there’s no escape from the legacy of family policing. This data, in turn, drives predictive risk models which aim to extend the reach of family policing agencies.

The leaders of child welfare agencies often situate predictive risk models as a mechanism to move the decision making away from biased front-line caseworkers, toward the scientific and rational decision making of an algorithmic system. These caseworkers are often seen as the source of contaminated data, in no small part because they tend to be predominantly Black and working-class themselves. In 2021, Emily Putnam-Hornstein, a co-developer of the AFST software in Pennsylvania, co-authored a report published by the American Enterprise Institute, a right-wing think tank, arguing that the high rates of surveillance and separation of Black families are due to the higher level of dysfunction and child abuse within Black families, which the authors explicitly attribute to the legacy of slavery and segregation. But there’s no evidence that Black families are more abusive than other racial groups. In fact, the subjects of the original Kaiser Permanente study on “adverse childhood experiences,” widely touted by proponents of family policing as a scientific justification for intervening in the lives of poor Black people, were nearly 80 percent white and almost half college graduates. The study was novel for revealing how much white adults are hurting and are in need of support. Yet instead of attending to that, it’s been weaponized against impoverished Black families.

It’s a Set-up, Kids!

When did New York City’s child welfare agency begin implementing predictive risk modeling? ACS is notoriously opaque, and highly resistant to public records requests. So it’s impossible to put together the complete story of how the agency implemented predictive risk modeling. But the broad contours can be highlighted by examining the turn toward automated decision-making following the resignation of former ACS commissioner Gladys Carrión in late 2016. In May 2016, during a panel at the White House’s “foster care hackathon,” DJ Patil, the chief data scientist for the Obama White House, asked Carrión what she thought of using predictive analytics in her agency. “It scares the hell out of me… I think about how we are impacting and infringing on people’s civil liberties,” she replied. She added that she ran an agency “that exclusively serves black and brown children and families” and expressed her concern about “widening the net under the guise that we are going to help them.”

Months later, Zymere Perkins was murdered by his stepfather. Perkins was a six-year-old child who was known to ACS through several child abuse and neglect allegations made to the New York Statewide Central Register of Child Abuse and Maltreatment. Zymere’s mother had a history of severe neuropsychiatric lupus and intimate partner violence when she met the man who would end up murdering her son. While she was living with Zymere in a Bronx shelter, multiple family members and staff raised concerns about her ability to care for the child. ACS came and went without providing any help. The mother needed serious psychiatric support, stable housing, and potentially for Zymere to live with a relative. But these are not the concerns of family cops. They are not trained therapists; they cannot provide affordable housing or medical treatment.

In the ensuing public firestorm, Carrión resigned. The new commissioner, David Hansell, promised to transform ACS to ensure that a tragedy like the Perkins murder would never happen again. Rather than reimagine a system in which families like Perkins’ could survive, however, the new leadership saw his death as proof that all poor Black families are definitionally at risk of murdering their children. Hansell shifted the agency’s resources to identifying and managing this risk, and turned to algorithmic tools to do so.

Shortly after becoming ACS commissioner in 2017, Hansell commissioned academics from NYU and CUNY to build a predictive risk model for the agency that would identify the likelihood of any given family who catches an ACS case being the subject of another investigation within a six-month period. One of the developers, Ravi Shroff, wrote a paper lamenting the relative scarcity of training data, comparing the approximately 55,000 calls ACS receives each year to the several million calls New York City 911 operators receive each year.

In the wake of the initial development of the predictive risk model, ACS released a “concept paper” emphasizing data collection as a key deliverable for nonprofit agencies like MercyFirst that enter into a contractual agreement with ACS. This highlights how splashy policy reports and white papers are not what principally shapes the implementation of data collection by child welfare agencies. Rather, phrenological tech or datafication is driven by stipulations in funding instruments, which often require the documentation of the behavior of families using specific proprietary software, as well as uploading that data to specific city and state data warehouses.

How is the data collected? The nonprofits that are contracted out by ACS to provide prevention services (typically the same nonprofits contracted out to do foster care) are given tablets that caseworkers use to collect data during home visits. These tablets are loaded with a subscription software called Safe Measures Dashboard, featuring low-tech “decision aids” such as Structured Decision Making (SDM) and tools for basic demographic data collection, which can then expand the training data for the agency’s predictive risk models.

The software provides a series of structured prompts for caseworkers to enter their data in an attempt to get “higher quality” or “structured data.” Many of the shorthand, rushed notes traditionally taken by caseworkers are deemed unusable because rigid algorithms cannot make sense of them. Like many devices composing the internet of things (IoT), the tablet includes a GPS sensor that tracks the caseworker’s movements over time in order to detect inefficiency, such as whether the caseworker is taking too long with a house visit. Surveillance extends not only to the family under investigation, but also to the caseworker tasked with monitoring them. Finally, the data gathered in the tablet is uploaded to a series of city and state data warehouses where it can potentially be used to train machine learning models.

The little oversight of this system that exists is not tied to any kind of enforcement mechanism. Should a family have a meaningful complaint about being labeled high-risk, or having their data used to train a machine learning model without their consent, there’s currently nothing they can do. Historically, family policing and experiments with automated decision-making systems have been conducted on the most marginalized in society, but agencies have been relentlessly building up their capacity to unleash these tools on everyone else, and rates of investigation of whiter and more middle-class people are increasing. Agencies like ACS promise that they won’t predictively model the likelihood of abuse across the entire population of children in the cities where they operate. However, this is an empty promise, and given their capacity to do so, there’s no reason to believe they won’t.

If I had brought Jasyn back to MercyFirst upon the supervisor’s request, that encounter would have produced administrative data that would legitimize an “order of removal” (the bureaucratic phrase used to describe family separation). Then, it is likely that the ACS investigators sent to our house would have “indicated” the case in the New York Statewide Central Register of Child Abuse and Maltreatment. An indicated case would result in the immediate removal of my four nieces and nephews, who were still in foster care, and eventually of my son, who was not. All of these possibilities were documented and collected into ACS’s system of record, ensuring that, even if we escaped, our encounters with the system would remain. Data and predictive risk modeling do not exist outside obscene forms of analog violence; they are an inextricable part of it.