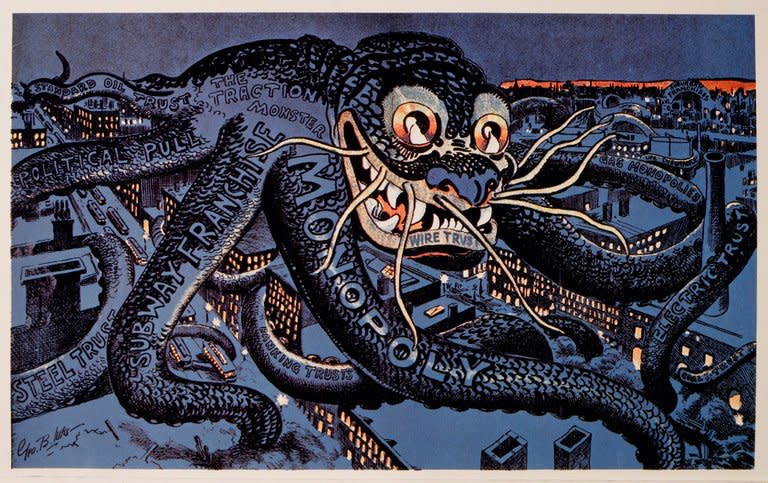

Over a hundred years ago, the upheavals of industrialization gave rise to what Louis Brandeis famously called the “curse of bigness.” The modern industrial economy increasingly came to depend on new technologies and infrastructures: railroads, modern finance, oil, and the like. Yet these infrastructures remained largely in private hands.

For Brandeis and his fellow Progressive Era reformers, the result was a fundamental threat to liberty, opportunity, and democracy. By virtue of their control over these foundational goods and services, private firms could extract greater profits from the public. If the essence of arbitrary, authoritarian governance was the concentration of unchecked power, then these firms represented a profoundly oligarchical mode of social order, where the public good remained dependent on the will and whims of chairmen and chief executives.

A hundred years later, the fear of bigness is with us once again—from the aftermath of the 2008 financial crisis, which introduced “too big to fail” into the vernacular, to growing concerns about corporate concentration in healthcare, airlines, and innumerable other industries. But nowhere is this anxiety about modern-day bigness more alive—and more difficult to address—than in the domain of technology.

As in the Progressive Era, technological revolutions have radically transformed our social, economic, and political life. Technology platforms, big data, AI—these are the modern infrastructures for today’s economy. And yet the question of what to do about technology is fraught, for these technological systems paradoxically evoke both bigness and diffusion: firms like Amazon and Alphabet and Apple are dominant, yet the internet and big data and AI are technologies that are by their very nature diffuse.

The problem, however, is not bigness per se. Even for Brandeisians, the central concern was power: the ability to arbitrarily influence the decisions and opportunities available to others. Such unchecked power represented a threat to liberty. Therefore, just as the power of the state had to be tamed through institutional checks and balances, so too did this private power have to be contested—controlled, held to account.

This emphasis on power and contestation, rather than literal bigness, helps clarify the ways in which technology’s particular relationship to scale poses a challenge to ideals of democracy, liberty, equality—and what to do about it.

Taming the Beast

Starting in the late nineteenth century, scale became the central focus for Americans concerned about emerging forms of corporate power. But what’s notable about the intellectual ferment around scale is the degree to which these debates turned not on bigness, but rather on issues of power and contestability. How much and what kinds of power did these new corporate entities exercise? And how could these concentrations of power be minimized, contested, and held accountable?

Power, crucially, could manifest in “good” and “bad” forms. Just because a firm acted benevolently did not change the fact that it possessed power. The key, then, was not outcomes but potential: so long as the good effects of corporate power relied on the personal decisions of corporate leaders, rather than on institutionalized forms of accountability, it remained a threat.

Perhaps the most famous front in this debate was the clash between Brandeis and other progressives like Herbert Croly and Teddy Roosevelt. For Croly and Roosevelt, corporate bigness was a problem, but one that could be addressed through government oversight. For Brandeis, by contrast, concentrations of corporate power had to be broken up through measures like antitrust laws.

While this is usually seen as a clash between bigness skeptics like Brandeis and bigness optimists like Roosevelt, much of this debate boiled down to a different set of disagreements: not about how big the government should let a company be, but about the best way to contest corporate power and hold it accountable. Roosevelt and Croly believed in the social and economic value of these corporate giants, and had faith in the capacities of public-minded, expert regulators to manage these concentrations, extracting the public goods and minimizing the public evils.

Brandeis shared many of these views. He was after all a classic progressive, who celebrated the importance of expertise and the emerging regulatory state. Nevertheless, he expressed skepticism towards concentrated corporate power, which in his view necessarily spilled over into political power as well. Economic influence could so easily be leveraged into political influence that the more prudent route would be to reduce concentration itself.

Furthermore, Brandeis, like many classical liberals, saw the marketplace itself as a machine for enforcing checks and balances. Once cut down to size, firms would face the checks and balances imposed by market competition—and through competition, firms would be driven to serve the public good, to innovate, and to operate efficiently. By making markets more competitive, Brandeisian regulation would thus reduce the risk of arbitrary power.

Around the same time, the architects of modern corporate law were trying to solve the same problem from another angle. In 1932, Adolf Berle and Gardiner Means published The Modern Corporation and Private Property, a landmark work that laid the foundation for modern corporate governance. For many modern defenders of free markets, Berle and Means are seen as architects of the idea that shareholders and other market actors could hold corporate power accountable through the “discipline” of financial markets. This would defuse the problem of corporate power and assure the economically efficient allocation of capital.

But this is misleading, because Berle and Means shared the Brandeisian unease with corporate power. In fact, their analysis highlighted a serious obstacle to holding that power accountable: the structure of the modern firm. Since a public corporation was nominally “owned” by a diffuse and inchoate set of shareholders, those shareholders couldn’t effectively assert control over it.

The answer for some, like Berle, lay in the public-mindedness of corporate managers. Like the regulators of the early New Deal, they would approach their control of the firm in a spirit of public service and with a sense of social obligation. For others, this faith in managerial goodwill was misplaced, and the answer lay in more assertive forms of shareholder control.

Meanwhile, the rise of the labor movement and the consumer rights movement took a different approach altogether. Rather than trusting firm managers, shareholders, or even regulators to act on their own, contesting private power required building autonomous, grassroots groups like unions and consumer organizations that could exercise their own forms of countervailing power.

The problem of scale, then, has always been a problem of power and contestability. In both our political and our economic life, arbitrary power is a threat to liberty. The remedy is the institutionalization of checks and balances. But where political checks and balances take a common set of forms—elections, the separation of powers—checks and balances for private corporate power have proven trickier to implement.

These various mechanisms—regulatory oversight, antitrust laws, corporate governance, and the countervailing power of organized labor—together helped create a relatively tame, and economically dynamic, twentieth-century economy. But today, as technology creates new kinds of power and new kinds of scale, new variations on these strategies may be needed.

The Varieties of Corporate Power

Corporate power isn’t a monolith. Rather, as the progressive crusaders of the past century recognized, it comes in several different forms.

First, there is literal monopoly: the direct control over an entire good or industry by a single firm. But that’s only the most blatant kind of corporate power: there are other kinds of dominance that are far less obvious.

One of these is control over infrastructure. Infrastructure can mean many things. It can refer to physical infrastructure, like highways and bridges and railroads, or it can be social and economic: the credit that forms the lifeblood of business, for instance, or the housing stock and water supply that provide the foundational necessities for life.

These infrastructural goods and services combine scale with necessity. They are necessities that make possible a wide range of “downstream” uses. This social value in turn depends on the provision of these goods and services at scale to as many people as possible. Where a good or a service is essential and irreplaceable, the user depends on its provider—they are, by definition, in a vulnerable position. So if a firm controls infrastructure, it possesses arbitrary power over all those who rely on the infrastructure.

But infrastructural power can also operate in a more diffused way. Much of the early debate around corporate power revolved around norms of nondiscrimination in serving travelers. The classic example was the innkeeper. The innkeeper is not a monopolist in the sense of massive scale and concentration. And yet, for the traveler in isolation, without other competing providers present, the innkeeper possesses a kind of localized dominance, with the ability to deny or condition service, placing the traveler at the innkeeper’s mercy. Indeed, this understanding of localized power played a major role in the development of public accommodations laws, which aimed to prevent this kind of diffused exclusion through generally applicable requirements of nondiscrimination.

Transmission, Gatekeeping, Scoring

A century after the debate between Brandeis and Croly, our technologically transformed economy poses new challenges. For much of the last few decades, these challenges were hidden from view: technology seemed to be at worst neutral and at best radically decentralizing, democratizing, and value-generating.

Recently, however, this optimism has begun to unravel. The problems of technology have come into sharper focus. But this has brought difficulties of its own: technological power today operates in distinctive ways that make it both more dangerous and potentially more difficult to contest.

First, there is transmission power. This is the ability of a firm to control the flow of data or goods. Take Amazon: as a shipping and logistics infrastructure, it can be seen as directly analogous to the railroads of the nineteenth century, which enjoyed monopolized mastery over the circulation of people, information, and commodities. Amazon provides the literal conduits for commerce.

On the consumer side, this places Amazon in a unique position to target prices and influence search results in ways that maximize its returns, and also favor its preferred producers. On the producer side, Amazon can make or break businesses and whole sectors, just like the railroads of yesteryear. Book publishers have long voiced concern about Amazon’s dominance, but this infrastructural control now extends to other kinds of retail activity, as third-party producers and sellers depend on Amazon to carry their products and to fairly reflect them in consumer searches.

As some studies indicate, Amazon will often deploy its vast trove of consumer data to identify successful third-party products which it can then displace through its own branded versions, priced at predatorily low levels to drive out competition. This is also the kind of infrastructural power exercised by internet service providers (ISPs) in the net neutrality context, through their control of the channels of data transmission. Their dominance raises similar concerns: just as Amazon can use its power to prevent producers from reaching consumers, ISPs can block, throttle, or prioritize preferred types of information.

A second type of power arises from what we might think of as a gatekeeping power. Here, the issue is not necessarily that the firm controls the entire infrastructure of transmission, but rather that the firm controls the gateway to an otherwise decentralized and diffuse landscape.

This is one way to understand the Facebook News Feed, or Google Search. Google Search does not literally own and control the entire internet. But it is increasingly true that for most users, access to the internet is mediated through the gateway of Google Search or YouTube’s suggested videos. By controlling the point of entry, Google exercises outsized influence on the kinds of information and commerce that users can ultimately access—a form of control without complete ownership.

Crucially, gatekeeping power subordinates two kinds of users on either end of the “gate.” Content producers fear hidden or arbitrary changes to the algorithms for Google Search or the Facebook News Feed, whose mechanics can make the difference between the survival and destruction of media content producers. Meanwhile, end users unwittingly face an informational environment that is increasingly the product of these algorithms—which are optimized not to provide accuracy but to maximize user attention spent on the site. The result is a built-in incentive for platforms like Facebook or YouTube to feed users more content that confirms preexisting biases and provide more sensational versions of those biases, exacerbating the fragmentation of the public sphere into different “filter bubbles.”

These platforms’ gatekeeping decisions have huge social and political consequences. While the United States is only now grappling with concerns about online speech and the problems of polarization, radicalization, and misinformation, studies confirm that subtle changes—how Google ranks search results for candidates prior to an election, for instance, or the ways in which Facebook suggests to some users rather than others that they vote on Election Day—can produce significant changes in voting behavior, large enough to swing many elections.

A third kind of power is scoring power, exercised by ratings systems, indices, and ranking databases. Increasingly, many business and public policy decisions are based on big data-enabled scoring systems. Thus employers will screen potential applicants for the likelihood that they may quit, be a problematic employee, or participate in criminal activity. Or judges will use predictive risk assessments to inform sentencing and bail decisions.

These scoring systems may seem objective and neutral, but they are built on data and analytics that bake into them existing patterns of racial, gender, and economic bias. For example, employers might screen out women likely to become pregnant or people of color who already are disproportionately targeted by the criminal justice system. This allows firms to engage in a kind of employment discrimination that would normally be illegal if it took place in the workplace itself. But these scoring systems allow for screening even before the employer is involved in a face-to-face interaction with the candidate.

Scoring power is not a new phenomenon. Consider the way that financial firms gamed the credit ratings agencies to mark toxic mortgage-backed assets as “AAA,” enabling them to extract immense profits while setting up the world economy for the 2008 financial crisis. But what big data and the proliferation of AI enable is the much wider use of similarly flawed scoring systems. As these systems become more widespread, their power—and risk—magnifies.

Each of these forms of power is infrastructural. Their impact grows as more and more goods and services are built atop a particular platform. They are also more subtle than explicit control: each of these types of power enables a firm to exercise tremendous influence over what might otherwise look like a decentralized and diffused system.

This is the paradox of technological power in a networked age. Where a decade or two ago, these technologies may have seemed intrinsically decentralizing, they have in fact enabled new forms of concentrated power and control through transmission, gateways, and scoring. These forms of power, furthermore, often operate in the background, opaque and hidden from view. This makes them harder to challenge and contest.

Inside Voices

If the problem with scale is less literal size and more these forms of concentrated, unaccountable power, then what are the remedies? As technology creates new kinds of infrastructural power, we need to develop new tools for holding that power accountable.

We can imagine a few different strategies. First, we might turn, like Berle and Means, to the internal politics of technological firms themselves. We might push for the creation of independent oversight and ombudsman bodies within Facebook, Google, or other tech platforms.

For these bodies to be effective and legitimate, however, they would need to have significant autonomy and independence. They would also need to be relatively participatory, engaging a wider range of disciplines and stakeholders in their operations. Precisely because of the infrastructural nature of their power, technology platforms affect a wide range of groups: workers within the firms, consumers more broadly, small businesses and producers, media companies, and the like. Any attempt to create internal checks and balances will have to find ways to engage these different constituencies and provide them with a channel through which to raise concerns and flag problems to be resolved.

A related idea might be the formation of more explicit professional and industry standards of conduct. It is not a coincidence that the professionalization of journalism emerged around the same time as print and broadcast media took a more concentrated form. Professionalization helped legitimate the industry’s growing dominance.

This mid-century custodial ethos is notably different from the Silicon Valley culture of “moving fast and breaking things.” Changing this culture will be difficult, but not impossible. Tech firms might prioritize a wider range of professional backgrounds outside of tech, engineering, and finance when developing internal leaders. The training of engineers could also incorporate a wider range of influences—ethics, sociology, history—whether through changes to the curriculum in leading university programs or through the formation of professional development practices within the industry.

These cultural and curricular shifts could be facilitated by third-party scoring systems. We need more independent nonprofit organizations with sufficient resources, technological sophistication, and public legitimacy to provide independent scoring of tech platforms’ data, privacy, and AI practices—similar to the LEED program that certifies green building practices.

A New Deal for Big Tech

Another route would be to follow the example of Teddy Roosevelt and the New Deal itself. To the extent that we doubt the efficacy and independence of self-regulation, we might create new government institutions for oversight. These agencies would have to leverage interdisciplinary expertise in data, law, ethics, sociology, and other fields in order to monitor and manage the activities of technological infrastructure, whether in its transmission, gatekeeping, or scoring forms.

Along these lines, several scholars have suggested the formation of regulatory bodies to assess algorithms, the use of big data, search engines, and the like, subjecting them to risk assessments, audits, and some form of public participation. Government oversight could attempt to ensure that firms respect values like nondiscrimination, neutrality, common carriage, due process, and privacy. These regulatory institutions would monitor compliance and continue to revise standards over time.

Yet both self-governance and regulatory oversight depend to some degree on the human capacities of the overseers, whether private or public. Call these managerial strategies for checking concentrated power. The problem with managerialism is that even if we built a powerful, independent, and accountable public (or private) oversight regime, it would face the difficulties endured by any regulator of a complex system: industry is likely to be several steps ahead of government, especially if it is incentivized to seek returns by bypassing regulatory constraints. Furthermore, the efficacy of regulation will turn entirely on the skill, commitment, creativity, and independence of regulators themselves.

A more radical response, then, would be to impose structural restraints: limits on the structure of technology firms, their powers, and their business models, to forestall the dynamics that lead to the most troubling forms of infrastructural power in the first place.

One solution would be to convert some of these infrastructures into “public options”—publicly managed alternatives to private provision. Run by the state, these public versions could operate on equitable, inclusive, and nondiscriminatory principles. Public provision of these infrastructures would subject them to legal requirements for equal service and due process. Furthermore, supplying a public option would put competitive pressures on private providers.

The public option solution is not a new one. Our modern-day public utilities, from water to electricity, emerged out of this very concern that certain kinds of infrastructure are too important to be left in private hands. This infrastructure doesn’t have to be physical: during the reform debate after the financial crisis, for example, there was a proposal to provide a public alternative to for-profit credit ratings agencies, to break the oligopoly of those ratings companies and their rampant conflicts of interest.

What would public options look like in a technological context? Municipally owned broadband networks can provide a public alternative to private ISPs, ensuring equitable access and putting competitive pressure on corporate providers. We might even imagine publicly owned search engines and social media platforms—perhaps less likely, but theoretically possible.

We can also introduce structural limits on technologies with the goal of precluding dangerous concentrations of power. While much of the debate over big data and privacy has tended to emphasize the concerns of individuals, we might view a robust privacy regime as a kind of structural limit: if firms are precluded from collecting or using certain types of data, that limits the kinds of power they can exercise.

Usually privacy concerns are framed as a matter of individual rights: the user’s privacy is invaded by firms collecting data. But if we take seriously the types of technological power sketched above, then privacy acquires a larger significance. It becomes not just a personal issue but a structural one: a way to limit the kinds of data that firms can collect, in turn reducing the risk of arbitrary and biased technological power. Such privacy rules can be achieved by legal mandate and regulation, or through proposed technological tools to deliberately corrupt some of the data that platforms collect on users.

Tax policy could also play a role. Some commentators have proposed a “big data tax” as another structural inhibitor of some kinds of big data and algorithmic uses. Just as a financial transactions tax would cut down on short-term speculation in the stock market, a big data tax would reduce the volume of data collected. Forcing companies to collect less data would structurally limit the kinds of risky or irresponsible uses to which such data can be directed.

Finally, antitrust-style restrictions on firms might reduce problematic conflicts of interest. For example, we might limit practices of vertical integration: Amazon might be forbidden from being both a platform and a producer of its own goods and content sold on its own platform, as a way of preventing the incentive to self-deal. Indeed, in some cases we might take a conventional antitrust route, and break up big companies into smaller ones.

Civic Scale

Creating public options or imposing structural limits would necessarily reduce tech industry profits. That is by design: the purpose of such measures is to prevent practices that, while they may bring some public benefits, are both risky and too difficult to manage effectively through public or private oversight.

Taming technological power will require changing how we think about technology. It will require moving beyond Panglossian views of technology as neutral, apolitical, or purely virtuous, and seeing it as a form of power. This focus on power highlights the often subtle ways that technology creates relationships of control and domination. It also raises a profound challenge to our modern ethic of technological innovation.

A key theme for Progressive Era critics of corporate power was the confrontation between the democratic capacities of the public and the powers of private firms. Today, as technology creates new forms of power, we must also create new forms of countervailing civic power. We must build a new civic infrastructure that imposes new kinds of checks and balances. But where new firms, however innovative, outstrip our ability to assure their accountability, we have to ask hard questions about whether we want to pursue such innovation in the first place.

Moving fast and breaking things is inevitable in moments of change. The issue is which things we are willing to break—and how broken we are willing to let them become. Moving fast may not be worth it if it means breaking the things upon which democracy depends.